AI Field Day: Why Storage Matters for AI

AI Field Day 2024

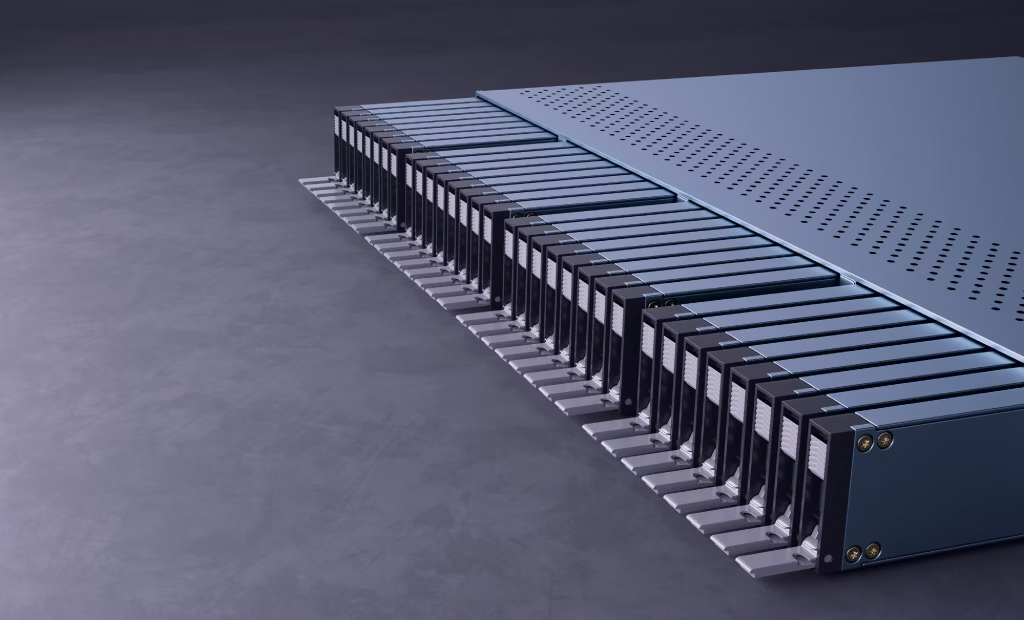

Learn how the Solidigm SSD portfolio is helping accelerate AI model development and deployment. As AI datasets and models grow, flash storage plays a critical role in improving time to market and cost and power efficiency.

Learn more about Solidigm SSDs and their role in storage for AI solutions here.

Video Transcript

Please note that this transcript has been edited for clarity and conciseness.

Featuring Ace Stryker, Director of Market Development at Solidigm, and Alan Bumgarner, Director of Strategic Planning for Storage Products at Solidigm.

Ace Stryker: We are from Solidigm, and we are here to talk to you about why storage matters for artificial intelligence workloads. So here are the topics that we're going to cover. We're going to tell you a little bit about who Solidigm is for those who are less familiar with us, what is our perspective on sort of the storage mission in AI, and what role do we as a storage company have to play in the ecosystem?

We'll talk about the market environment and, in particular, the storage opportunity as workloads grow, as architectures evolve, and so forth. There's lots of stuff we'll dig into there. Of course, we all think about compute first, right, when we think about hardware for AI. And we should, that comprises the bulk of the materials in an AI server. But what you'll learn today is that storage plays a more and more critical role as the industry matures and as workloads diversify. We'll talk about the ways in which that shows up.

We'll go through the actual steps of an AI workflow and talk you through the role of storage devices and what are the I/O demands generated at each step along the way. And then we'll give you a kind of a quick fly over of the [Solidigm] product portfolio, sort of our advocacy in terms of what Solidigm products work well for AI use cases.

So, a quick 30-second introduction to the company. We were born from an acquisition by the Korean company SK hynix of Intel's storage group, NSG, the non-volatile storage solutions group. We just turned two years old on December 30th [2023]. And so, a new name but a long legacy of expertise, a huge IP portfolio, and a very broad product portfolio.

Our parent company is in South Korea; we are headquartered in the Sacramento area. And this is us, the two of us you'll hear from today. My name is Ace. I have been with the company since its inception [in December 2021] and going back about another 5 ½ years with Intel prior to that, mostly in the storage group.

Today I work on market development. So I explore applications and emerging use cases upon which we can apply our existing product portfolio. Alan [Bumgarner] is here as well as the domain expert, the AI brains behind the whole operation, and he'll talk you through some of the more technical aspects of the presentation and workload composition and analysis as we go.

[Let’s] start with a quick quiz. I wonder if anybody here knows this: what was the first artificial intelligence ever depicted in a feature film? Does anyone want to hazard a guess? You won't be as fulfilled if you get it right.

Audience member Karen Lopez: Metropolis.

Stryker: Exactly, exactly.

Audience member: How did you know that?

Stryker: Very good.

Audience member Karen Lopez: Because it's like one of the oldest films ever. I'm smart. I should be AI.

Stryker: I'm actually not super familiar with this film. Paul, who you'll hear from at the Supermicro [session], is an expert on this and can talk a lot more about it.

Audience member Karen Lopez: It’s beautiful.

Stryker: This was a German expressionist film from 1927, evidently. And this sort of evil robot was devised to impersonate a human, I think, and sort of violate workers' rights and make the rich richer and make a mine unsafe to work. And I'm not sure of all the details, but it wasn't a great start in feature film. We're hoping to do things much more constructive these days.

Okay, so what is [the] Solidigm view of what we're here to do. It turns out AI might be kind of a big deal. I don't think I'm saying anything controversial there. But as the use cases evolve, as the architectures evolve, as the data sets, particularly upon which these models are trained, get bigger and bigger—and we'll look at some data on trends there—the role of storage becomes more and more key to a few things: to efficient scaling, to operational efficiency.

So we'll take a look at how SSDs stack up against hard drives a little bit. We'll talk about TCO and efficiencies in terms of space and cooling and power that SSDs bring to bear within an AI server as well.

And so [the] Solidigm position is we have a broad portfolio of products today that map quite well onto some of these use cases, depending on the particular goals and strategies of the folks training and deploying the models. And you can expect to hear more from us in the coming months and years as we get more and more into this, and look more and more toward future opportunities and technological innovations.

A quick word about our partners. One of the best parts of this job is working with the best partners on the planet. We have Supermicro here, and you'll hear from them in a little bit, but there are a great many that we're privileged to work with across the industry that are using our SSDs in all sorts of interesting ways to accelerate AI workloads, to optimize infrastructure, and to make sure that GPUs are being fed and utilization is being maximized.

This is just a few examples of some of the folks that we work with and some of the folks that we're sharing ideas with all the time as the strategy evolves here.

Okay, so we'll start kind of broad here, then we'll move quickly and narrow down on storage. AI chip spending is exploding. This isn't a surprise to anybody, right? What's coming along with that is the decentralization of where the compute and storage is located. So we're moving from a world where a lot of this stuff was by necessity housed in the core data center, to where more and more of the work can now move to the edge.

You can do a lot more intense work at the edge in terms of real-time inference and reinforcement learning. And so distributed architectures are becoming more and more important as folks design and deploy these solutions. And we see a lot of growth at the edge. Growth at the edge is outpacing core growth in the near to mid term.

Hard drives are a big deal still in this realm, as they are in data centers generally. And we'll talk about that and we'll talk about what the comparative view is there. But our own market analysis, our conversations with customers, has taught us that 80% to 90% of the data that's out there, that's being prepared and fed to models for training, lives on hard drives. And so we see a tremendous opportunity there to accelerate that transition to flash memory and get this stuff done more efficiently, more quickly.

This is another view with some data. This is from a great kind of repository called Our World in Data that's run by Oxford University. And it talks about some of the models and so forth that I'm sure you're very familiar with. The general trend line here gives you a view, and we'll look at a specific example, the evolution of GPT through the generations, on the next slide.

But the models are only getting bigger, more sophisticated, more parameters. Bigger raw data sets [are] being tokenized and fed in to make these things better and better at generating insights and interacting with humans. And the growth in the compute needed to continue to develop that is commensurate.

Audience member Ray Lucchesi: You mentioned on the prior slide that storage content for AI servers [is] going up, I don't know, 2 ½ to 5x or more. A 3x, I'm sorry. Is that the cost of storage in those servers? I mean, I didn't see anything that told me what the actual numbers are.

Stryker: That's a comment on the density of the storage with the space.

Audience member Ray Lucchesi: So the space?

Audience member Karen Lopez: Larger is how I interpreted it.

Stryker: More and more data.

Audience member Ray Lucchesi: Yeah, I'm trying to understand if it's a cost component or is it a space component in a server?

Stryker: I mean, it has implications for all of the above, right?

Audience member Roger Corell: It's specifically capacity, yeah.

Audience member Ray Lucchesi: Oh, so it's a capacity number. Okay.

Alan Bumgarner: In general, for an AI server, for a training environment, you're feeding it much more data than you are for even most transactional database servers.

Audience member Ray Lucchesi: I understand that completely.

Bumgarner: Pretty simple one, yeah. It's a good question though. Thank you.

Stryker: Good question. Thanks.

Okay. We're also seeing, as the models become more sophisticated, the applications diversify and grow as well. Right? So there isn’t an area of personal life that isn't already touched by AI and that will continue to be the case. As Solidigm, we see opportunities to attack a few of these first, where we think we can accelerate the workflow, but certainly across all of these, there are storage demands. And we'll get into those as we go through the presentation with you.

So high level, why storage matters to AI in 1 slide. Here we are. So the data sets are growing logarithmically. The chart on the top left is the count of training parameters in the GPT models, starting with Gen 1 back in 2018.

And we're now, you know, GPT 3 was billions of parameters, GPT 4 is trillions of parameters. So this thing is just blowing up, right? The more data that you can pull in and prepare and expose your model to, the smarter it's going to be. The bottom left gives you an idea of some of the source material.

So if you're doing an LLM, a large language model, a lot of those are built on the Common Crawl corpus, which is a web scrape every three or four months. Today, you know, they've been doing it since 2008. Today, the total body of text there, I think, is 13 or 15 petabytes and only rising, right? So more and more data being fed into these models.

The second reason storage matters to AI has to do with optimizing your spend, optimizing your power budget, optimizing your rack and data center footprint, right? So we know that a lot of that spend is going to GPUs, but those GPUs need high-performance storage to be efficiently fed to do their work and remain at high utilization throughout the process. That's particularly true at certain points in the process, which we'll talk about, like checkpointing, which is a key part during training that's very, very storage dependent and essentially leaves GPUs idle as long as that's occurring.

So that's one area where we see a great place for high-performance storage. Power I mentioned as well. This data here in the center of the bottom column comes from a white paper published jointly by Meta and Stanford University. In the particular use case that they were explaining in the white paper, storage consumed as much as 35% of the power footprint of the whole server.

So big, big implication. If you can do that more efficiently, with higher-density storage and with other optimizations, you can potentially save a lot of power and consequently a lot of money. And then finally, the distribution piece we talked about, where we're moving away from this all-in-one paradigm where the whole workflow is happening end to end in one location.

Now, depending on the specifics of the use case, it could be happening in many different locations. And I want to stress, as you look at this chart and as you see some of the other things that we're going to show you: AI is not one thing. It's not monolithic. And the details will, of course, vary from one deployment to another, from one application to another.

Audience member: So can you clarify what near edge versus far edge is?

Stryker: Yeah, absolutely. So, core data center we're familiar with, right? Far edge is the stuff that's out there. It might be in a truck. It might be ruggedized. It might be endpoints that folks are interacting with. Near edge may be several miles from the far edge. It may be a regional data center, sort of an in between place.

Bumgarner: Kind of like a colo. A colo facility is near edge, and then a far edge could be on a telephone pole. It could be in a machine in a factory. An automobile could be a far edge. Things like that.

Audience member: Like IoT devices?

Audience member: In fact, I was going to ask that question.

Bumgarner: Kind of like an IoT. Right.

Stryker: Yeah, absolutely.

Audience member Ray Lucchesi: You're saying that inferencing is happening at... reinferencing, is that what that's saying?

Stryker: The light blue bar there is inferencing. Yeah, yeah.

Audience member Ray Lucchesi: Inferencing, I understand. Data preparation and ingest is happening at IoT devices. And inferencing is happening at IoT devices. What's the... is it reinforcement learning?

Stryker: Reinforcement learning. So lightweight training that's occurring that doesn't necessarily need to be fed back to the core data center in order to refine the model.

Bumgarner: And I would add we have a lot of customers that we work with who don't want to ship data back. They have places where they have servers that they don't have satellite connectivity all the time or cell phones. So there's a lot of, “I want to be able to do this on the edge.” And then every now and then I'll take a snapshot of that data, take it back and retrain it.

Audience member Donnie Berkholz: Could you share like a couple of real examples of what those places might be?

Bumgarner: Yeah, there's a lot of telephone poles that have little boxes on them. And you'll see from our partners over here in just a little bit, they actually sell one of those servers that sits on a telephone pole. Power substations [or] any kind of machine factory that's off of the grid or maybe out in the wilderness.

There's a whole bunch of machine tools and robotic things that can happen that you want to be able to analyze data from those things to look for trends or failures or different kinds of patterns that you want to find. It's almost unlimited, though.

Audience member Donnie Berkholz: I'm just curious what your customers have versus like the theoretical possibilities.

Bumgarner: The theoretical possibilities are kind of endless right now, especially for deep neural network models.

Stryker: Great questions. Any other questions on this one? All right, rolling along. So I want to talk a little bit about the view of hard drives versus solid state drives. This, of course, is highly oversimplified, but it directionally gives you an idea of where the real strengths lie in flash media.

The rows in this table represent stages of the AI workflow, which we’ll sort of dissect and characterize in greater detail for you in a bit. But, you know, relative to each one of those, there's sort of a key I/O parameter, one of the four corners performance numbers, if you will, that we've identified as particularly important for walking data through that stage of the workflow.

You can kind of see there where a typical 24TB hard drive lines up and where one of our products, the [Solidigm] D5-P5430, lines up as well. And so clearly some great gains to be had throughout the pipeline. And this view gets even more intensified when a couple of things happen.

So one of them is, we see a lot of over provisioning of hard drives out there in the wild to meet minimum IOPS requirements. And so that has a direct impact on your TCO calculation, because now you're having to buy X percent more hard drives than you need to meet your capacity demands in order to account for over provisioning.

And the other thing is, we see that within an architecture, within a server, one system resource, for example the storage device, isn't necessarily just doing one thing at a time. It's not just walking through a single pipeline where your SSD is doing ingest, and then it's involved in prep, and then it's involved in training.

But in fact, it may be hit by multiple pipelines at the same time, and your storage device might be involved in a training task at the same time it's involved in a checkpointing task or a preparation task for a pipeline that's happening in parallel. And so this generates more of a mixed I/O workload, and I think you'll hear a little bit more about that from our friends at Supermicro as well.

But that's an area, of course, where SSDs shine when you see that kind of mixed traffic from concurrency or multi tenancy hitting the drives with multiple stages of this pipeline at once.
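To make the over-provisioning point concrete, here is a minimal sketch of how an IOPS floor, rather than capacity, can end up setting the hard drive count. The helper `hdds_to_buy` and every number passed to it are hypothetical values chosen for illustration, not figures from the talk or from any drive datasheet.

```python
import math

def hdds_to_buy(capacity_tb, required_iops, drive_tb, drive_iops):
    """Return (drives for capacity, drives for IOPS, drives actually purchased)."""
    for_capacity = math.ceil(capacity_tb / drive_tb)
    for_iops = math.ceil(required_iops / drive_iops)
    # Whichever requirement is larger decides how many drives are bought.
    return for_capacity, for_iops, max(for_capacity, for_iops)

# Hypothetical numbers for illustration only, not measurements from the talk.
cap, iops, buy = hdds_to_buy(capacity_tb=2_400, required_iops=60_000,
                             drive_tb=24, drive_iops=300)
extra_pct = 100 * (buy - cap) / cap
print(f"capacity needs {cap}, IOPS needs {iops}, buy {buy} ({extra_pct:.0f}% extra)")
```

With these made-up inputs the IOPS requirement doubles the purchase relative to what capacity alone would need, which is the effect that feeds into the TCO calculation.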

All right, we mentioned TCO a couple of times, so I want to spend a minute here because there's a lot that goes into these calculations. There's a whole TCO calculator we've developed. SNIA has one as well that I think is very similar in some ways, but it takes into account all sorts of different factors when you get to the purple bar down at the bottom.

But in this case, we're solving for an AI data pipeline that's a 10 petabyte solution. You can accomplish that with all hard drives. You can also accomplish that with, for example, our [D5-]P5336, which is our really high density QLC SSD, available in capacities up to 61TB today. So we're storing that amount of data across 24TB hard drives, assuming 70% utilization. We are assuming some over provisioning is occurring there on the hard drives as well, and we believe that to be a conservative number. We've heard from partners and from others that 50% may be closer to what's happening in the real world. But to give hard drives the benefit of the doubt, we're assuming 70%.

Even in that case, you're talking about 5x fewer drives. That density advantage drives a lot. So not only are these drives storing quite a bit more data. They are also physically smaller. You're going from a 3.5-inch form factor down to the U.2, and we have some other form factors available for various products as well.
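The drive-count comparison can be sanity-checked with capacity-only arithmetic. The sketch below is a rough illustration, not the Solidigm or SNIA TCO calculator: `drives_needed` is a hypothetical helper, the 100% SSD utilization is an assumption, and because it ignores the other inputs those calculators use, it is not expected to reproduce the slide's exact ratio.

```python
import math

def drives_needed(capacity_tb: float, drive_tb: float, utilization: float) -> int:
    """Whole drives required when only `utilization` of each drive is usable."""
    return math.ceil(capacity_tb / (drive_tb * utilization))

SOLUTION_TB = 10_000  # the 10 PB pipeline from the talk, in decimal terabytes

hdd_70 = drives_needed(SOLUTION_TB, 24, 0.70)  # 24TB HDD at the talk's 70% utilization
hdd_50 = drives_needed(SOLUTION_TB, 24, 0.50)  # the 50% figure heard from partners
ssd = drives_needed(SOLUTION_TB, 61, 1.00)     # 61TB SSD; full utilization is an assumption

print(hdd_70, hdd_50, ssd)
print(round(hdd_70 / ssd, 1), round(hdd_50 / ssd, 1))
```

Under these simplified assumptions the hard drive count comes out at roughly 3.6 to 5.1 times the SSD count, depending on which utilization figure is used. The 5x quoted in the talk presumably reflects the fuller set of calculator inputs.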

But that has a direct impact on how many servers do you need to populate the storage you require? How many racks are needed to house those servers? So you can think of it almost as a cascading effect where fewer smaller drives, physically smaller drives with higher densities, leads to fewer servers, fewer racks, smaller data center footprint and power optimizations as well.

Because even though on a per unit basis, you can look at one drive versus another and make a judgment, in the aggregate is where that really matters. In the aggregate, you can look at something like your effective terabytes per watt there and say, hey, the power density I'm getting, because these drives are so high capacity, is a huge cost saver and a huge part of the TCO story.

And directly tied to the TCO benefits are kind of those green icons that you see in the right column there. So those are sustainability implications. We're not just talking about saving money, right? We're also talking about doing this in a greener way.

And so 5x fewer drives is a lot less hardware to dispose of at end of life, not to mention the servers and the racks as well that you no longer need that are part of the EOL story.

Audience member Donnie Berkholz: When I'm looking at that, I'm struggling to figure out what the biggest cost drivers are because there's only dollar signs in one of those. Is it the drives or the number of servers?

Stryker: The density is the biggest driver of the TCO here, by far. I think we have the calculator on our website. Do we not, Roger? Yeah. So you can go in and turn the knobs, and there's an awful lot of them. But in terms of what's really driving TCO, it's the fact that innovations in flash have given us 61TB drives, and so everything kind of flows from that. And so you're saving on your server footprint, your data center footprint, you're saving on your power envelope as well. So all of these things feed into a great cost savings over the life of the hardware. Any questions on that one?

Cool. All right. And then finally, additional advantages for solid state drives, generally, in workloads. We talked about density a lot, so I won't belabor that point. XPU utilization: we'll talk about this a little more in depth. Alan will get into the workload characterization, but there are phases of the workflow, particularly during training, where you want your GPUs to be just as busy as they possibly can be.

You've spent a lot of money on that hardware. You do not want it to sit idle because it's being starved for data or because it's waiting for your storage devices to write a checkpoint. So these are all areas where high performance storage can help maximize the value and the utility of the hardware that you've bought on the compute side.
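As a rough way to picture the checkpointing cost, the sketch below estimates the share of wall-clock time GPUs spend waiting on checkpoint writes. It assumes training pauses completely while each checkpoint is written, and the function name, checkpoint size, interval, and bandwidth figures are all hypothetical, not numbers from the talk.

```python
def checkpoint_stall_fraction(checkpoint_gb, write_gb_per_s, interval_s):
    """Fraction of wall-clock time GPUs wait, assuming training pauses for each checkpoint write."""
    write_s = checkpoint_gb / write_gb_per_s
    return write_s / (interval_s + write_s)

# Hypothetical inputs: a 1,000 GB checkpoint taken after every 30 minutes of training.
for label, bandwidth in [("slower storage", 1.0), ("faster storage", 10.0)]:
    frac = checkpoint_stall_fraction(1_000, bandwidth, interval_s=1_800)
    print(f"{label}: GPUs idle {frac:.1%} of the time")
```

The absolute percentages depend entirely on the made-up inputs. The point is only that, for a fixed checkpoint size and interval, idle time shrinks as write bandwidth grows.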

And then checkpointing is, as I mentioned, a key part of that, as well as the restore piece, which is related, and we'll talk about both. Okay. So that's it from me. I'm gonna hand it over to Alan to walk you through some of the workload characterization pieces.

Alan Bumgarner: Hey guys, I’m Alan Bumgarner. I'm the director of strategic planning. I do a lot of SOC memory kind of far in the future stuff. I also do a lot of workload characterization things. So, back to what Ace was saying a little earlier, does anybody remember how many hard disk drives I need to equal the performance of one Gen 4 PCIe SSD?

Audience member Ben Young: 10 to 23.

Bumgarner: 10 to 23. Right. And so that gives you that nine racks of hard disk drives versus my single rack of SSDs, right? Granted, one costs a little more than the other. I think we all know the answer to that one. But think about it from a TCO perspective when you're running a great big graphics cluster. Has anybody heard of the investment that Microsoft made in a fusion startup for a reactor so they could power their new graphics cluster?

Audience members: laughter

Bumgarner: So the number one thing that most of these folks are thinking about is power. And if you kind of do the math and you do the real estate thing, and you're thinking about, okay, I have so many cabinets of hard disk drives that are eating so much power, and I have all these new graphics cards that I'm bringing into my data center, and I need to power those.

It's kind of a natural progression from one to the other, right? So you have to find an area, and then more than that, you have to find power that you can route to those things, because they are fairly power hungry. Anybody know how much power an H100 eats?

Audience member: 250 watts.

Audience member Ben Young: More than that, I think.

Bumgarner: You're getting closer.

Audience member: 1600.

Bumgarner: Almost.

Audience members: laughter

Bumgarner: All right. I'll talk through some of that fun stuff as we go through. So there's kind of five stages, and this is really modeled around kind of a generative AI or a deep learning recommendation model. And these are big models that companies here in the Bay Area use all the time for a bunch of different things. So you have kind of five stages here for the training piece. First, you have to ingest all of these massive amounts of data, right?

And you have to take that data. And once you get it the data is usually dirty. So there's a whole lot of talk around new AI models that probably aren't going to be popping up all over the place because really the trick here is to have a finely curated data set that you've cleaned and scrubbed and made sure that it's very accurate before you feed it into your graphics cluster.

And so if you recall, Ace showed a little graph that showed how much power gets used by Meta before they actually run it through their graphics cluster and storage. That's this front phase, right? That's the data ingest. You take all these things, you have to turn them into columns and rows, and then you have to feed it through and start to vectorize it as you're running it through.

So there's a whole lot of work that happens up front, from a storage perspective and a database perspective, before you even get to the fun training part, where you're starting to tokenize everything. And look at the I/O when you're ingesting data. For a ChatGPT, you're taking an Internet scrape, which is, what, 12 or 13 petabytes these days, versus somebody like Meta, who takes in overnight all the information they get from cell phones and PCs and folks using their application.

I like to tell this joke that I talk to my wife's phone all the time, I'm like, “Hey, 911s are really like very reliable, great family cars.” And then the next day she sees an advertisement for one. She's like, “Why am I getting Porsche advertised to me?”

So there's a lot of stuff that happens as this data comes in. It's not just text data, it's pictures, it's voice. It's all of these multimodal things that happen. So then you’ve got to take this and figure out how to train it. And so you've got a model, and you have a whole huge graphics cluster that you run all of this data through, and you go from writes to a lot of read-write while you're vectorizing, columnizing, and row-izing the data. (I guess those probably aren't words.) And then as you pull it in, you're doing mostly reads through your training cluster. And then you finally get all done, and you have this nice trained model.

And now you have to inference it. So who used ChatGPT while that was speaking Spanglish last night? Did anybody get that?

Audience member: I just heard about it.

Bumgarner: I just read about it too. I didn't actually use it. But, yeah, that's inferencing, right? When you're asking it a question. Inferencing is also when somebody like Meta wants to figure out how to advertise something to you, because they've seen it's something that you were associated with.

So think about it. We talked about this a little earlier, and there was a question on inferencing and where that happens. I think it was from over here. Great question. When you're inferencing for a generative AI model, you're asking it a question and you're expecting an answer in language. When you're inferencing for predictive failure on a machine tool inside of a factory, you're probably doing it right there at the factory with the trained model, and you're looking for patterns that show the tool is about to fail: things like vibrations, or extra things that are happening to some of the power or voltage on that tool.

So inferencing can happen from the core data center, which is what we're doing when we're typing on a keyboard to one of the AI models, to a lot of stuff that could be coming in the pipeline for applications for AI. And if you kind of scale that up there's a lot of things that you can do in a cloud when you have that kind of Internet link and great big data pipes. And there's a lot of other fun stuff that you can do at the near edge and at the far edge when you don't have those data pipes.

Any questions? I think everybody kind of gets this piece.

So as you break through and you think about different kinds of AI models and workflows, there's the ingest again, which is a constant read or a constant write to a solid state drive. If you're thinking about it from my perspective, that's not too high performance. So it's something that you can handle with something like a QLC drive that doesn't have super huge write performance, but has massive read performance.

When you're doing your checkpointing or your training or your preparation, there's a lot of read and write. So you're doing what we call a very mixed four corners workload, and so you're hitting random reads. Most of it is random, by the way, random reads, random writes. But then you start as you kind of close in on getting good, clean data, it starts to become more sequential as you're working through it. So it kind of lends to what we'd call a TLC or a three level cell drive.

And then, as you're going into a training piece you're doing 100% reads, so you're sucking all that data in, you're running it through your graphics cluster, you're doing massive amounts of single and double precision floating point compute on it, so you can calculate. Anybody know what the stochastic gradient descent is?

I just had this conversation with my son who's taking Calculus 2. He asked me, “What does this mean?” I was like, “Well, that's the, that's finding the line, the optimal line down the mountain when you could take any path down the mountain, so you can figure out the gradient descent.”
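For readers who want to see the "path down the mountain" idea in code, here is a minimal sketch of stochastic gradient descent fitting a straight line. It is a generic textbook illustration, not the training loop of any model mentioned in the talk. The function name, learning rate, epoch count, and the toy data generated from y = 2x + 1 are all assumptions made for the example.

```python
import random

def sgd_fit_line(points, lr=0.05, epochs=200, seed=0):
    """Fit y = w*x + b by stepping downhill on one sample's squared error at a time."""
    rng = random.Random(seed)
    data = list(points)
    w, b = 0.0, 0.0
    for _ in range(epochs):
        rng.shuffle(data)              # "stochastic": visit samples in random order
        for x, y in data:
            err = (w * x + b) - y      # prediction error on this single sample
            w -= lr * 2 * err * x      # gradient of err**2 with respect to w
            b -= lr * 2 * err          # gradient of err**2 with respect to b
    return w, b

# Toy, noise-free data on the line y = 2x + 1, with x between -1 and 1.
points = [(i / 10, 2 * (i / 10) + 1) for i in range(-10, 11)]
w, b = sgd_fit_line(points)
print(f"w = {w:.3f}, b = {b:.3f}")
```

Each update only looks at one sample, so no single step is the best possible move, but the steps on average head downhill toward the slope and intercept that generated the data.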

And then you get to the inferencing piece. And the inferencing piece is when you're trying to use this data or this model that you've produced to find something fun. Here's a great one from the Porsche 911 example earlier. Does anybody know how many deep learning recommendation clusters there are that Meta has worldwide?

Audience member: How many clusters or how many…?

Bumgarner: How many clusters?

Audience member: More than three?

Bumgarner: 19 I think was the last count globally. So if you think about that, and you know how many GPUs?

Audience member: 350,000.

Bumgarner: Yeah. So divide that by 19 and you can think about how many graphics engines you have to feed and how much data they're ingesting globally to do this all the time. And they're not the only ones, right? Google has a bunch of clusters that do this. Amazon has the same thing going on all the time.

And this has been happening for years. One of the things that we found out when we were reading this paper that was really interesting was if you look at that little power thing on the lower left, it doesn't specifically state it, but I'm guessing most of that was hard disk drive. And so as people are learning that those GPUs that they have to power and all those clusters eat more power, there's less and less real estate for those things to happen.

So we think it's a boon for at least the solid state drive business for the foreseeable future.

Stryker: If we've done our job, we've convinced you that storage has a key role to play within an AI server in terms of accelerating the workflow and in terms of seeing it through end to end with efficiency, right?

So the next question becomes, well, what do you recommend for these kinds of workloads? And the answer, like the answer to most good questions, is it depends. So what we have here is some advocacy based around our current product portfolio depending on sort of the goals of the folks developing and deploying the model.

I'll walk you through them kind of row by row, but starting at the top in the dark purple, this is sort of the max performance solution. And this is a combination of technologies. So this one's a little more [complicated], it takes a little more explaining than the others, which are single drive recommendations.

But in this case, we have a software layer called CSAL, or the cloud storage acceleration layer. What that enables us to do is to take incoming writes and to direct them intelligently to one device or another. And it appears to the system as one device, but under the hood, CSAL is essentially directing traffic.

Audience member: And what's the device? Are you talking NAND chips? Are you talking SSDs?

Stryker: I'm sorry, can you repeat?

Bumgarner: The question was: Are you talking NAND chips or SSDs? So to make it really simple, the cache buffer is the [P]5810. If you remember, the very first SSDs were single level cell, SLC. And so they're very, very fast read-write. And so that's kind of sitting in front as a buffer for the QLC, or the quad level cell.

Audience member: It's the CSAL, that's the one I'm trying to understand.

Bumgarner: Yeah, it's the cloud. So that's a caching software layer that allows you to write almost all of your…

Audience member: That operates on a host or operates on a drive?

Bumgarner: That operates on a host. So you have a host software that's running that has two sets of drives inside of the server. Your SLC drive’s write buffering in front of your QLC drives, which have a little lower write performance.

Audience member: Different drives, one SLC and one QLC.

Bumgarner: That's right.

Stryker: Yeah, great question. Thank you.

Bumgarner: Which is why it depends.

Stryker: So, that's what makes this one sort of the best of all worlds. Because you can get huge capacities by virtue of the QLC component. You can also get face melting write performance because we have the SLC drive. And CSAL will take care of using those ingredients optimally throughout the workflow.
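The host-side buffering described in this exchange can be illustrated with a toy model. This is only a sketch of the general idea (absorb incoming writes in a small fast tier, then flush them to the capacity tier in ordered batches); it is not CSAL's actual design or API. The class name `TieredWriteBuffer`, the `buffer_limit` parameter, and the dictionary-backed "drives" are all hypothetical, and the toy serves not-yet-flushed blocks from the buffer purely to keep reads correct.

```python
class TieredWriteBuffer:
    """Toy model: a small fast buffer in front of a large capacity tier."""

    def __init__(self, buffer_limit):
        self.buffer_limit = buffer_limit  # max blocks held in the fast tier
        self.buffer = {}                  # stands in for the SLC drive
        self.capacity = {}                # stands in for the QLC drive
        self.flushes = []                 # block order of each flushed batch

    def write(self, lba, data):
        # Every incoming write lands in the fast tier first.
        self.buffer[lba] = data
        if len(self.buffer) >= self.buffer_limit:
            self.flush()

    def flush(self):
        # Sort by block address so the capacity tier sees one ordered batch
        # instead of many small scattered writes.
        batch = sorted(self.buffer.items())
        for lba, data in batch:
            self.capacity[lba] = data
        if batch:
            self.flushes.append([lba for lba, _ in batch])
        self.buffer.clear()

    def read(self, lba):
        # Blocks not yet flushed are still only in the buffer (toy assumption).
        if lba in self.buffer:
            return self.buffer[lba]
        return self.capacity.get(lba)


store = TieredWriteBuffer(buffer_limit=4)
for lba in [7, 2, 9, 4, 1]:
    store.write(lba, f"block-{lba}")

print(store.flushes)   # batches as they reached the capacity tier
print(store.read(7))   # served from the capacity tier
print(store.read(1))   # still waiting in the buffer
```

The caller sees a single `write`/`read` interface, which mirrors the point that the combination appears to the system as one device while the software decides where each write goes.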

Audience member: So, talk to us about the importance of this as we look at the different phases, because not all environments are going to be in just prep, train, checkpoint, inference. Obviously, these are going to be, in a lot of cases, different systems. So, let's take prep, for example. Where does CSAL help in prep? We had an earlier presentation where they talked about, it took them two weeks to get to the data they wanted to train on before they actually sent the jobs to the GPUs. So it's super critical that we send the right data into the system. So where does this come into play in helping me optimize for prep?

Bumgarner: Yeah, actually, it's a great question and we bounce back and forth on that. But I think the easiest way to think about it is depending on the amount of data that you're ingesting. If you have the luxury of being able to write that slower because it's coming over a time series, then something like CSAL could be really, really beneficial because you can write a lot of data and you can squeeze it in there pretty good.

When you're pulling that data out of the QLC drive, the way we kind of see it is if you're doing an extract transform load where you're column and rowing, that's when you want the performance of a TLC drive, the three-level cell. Because you're getting pretty much your optimal read-write combo from that one.

And so as you're starting to index the data and you're starting to make sense out of it and clean it and get rid of duplicates and all of the fun stuff, which is the two-week journey that they have to go through, that's kind of the balanced performance right in the middle.

So that's really the first row you can use for ingest and you could use for training, but as you're doing the extract transform load, we kind of see that as a triple level cell or a TLC style drive.

Audience member: Well, I'm sure Supermicro will go into a portfolio how they package this and talk to customers about the specific use cases across the portfolio and how they recommend teaming the overall distributed system for each one of these phases, hopefully.

Bumgarner: And I think some of it is actually pretty new data since we're really focusing on AI as a workload now, or as an application. But, yeah, you'll see a lot of stuff from those two gentlemen over there on their portfolio and how flexible it is. That'll allow you to accommodate all of the different types of use cases.

Stryker: Great discussion. Thank you. Okay. The 2nd row there, and sort of the medium purple, if you will, is the balanced recommendation here. So good read and write performance, lower costs than the solution that we're advocating with the SLC buffer. And the reason you see 2 drives in that row is because the [P]5520 is a TLC drive. The [P]5430 is a QLC drive. Based on the workloads we've analyzed and what we've understood from customers and partners about sizes of the data sets and so forth, we believe either to be a great solution for folks who want to prioritize that and get a great high-performance solution at a lower cost.

The coloring of the bars there gives you a sense of kind of relative capabilities between the 2. So the [P]5520, the TLC drive, is the orange dots there, and the QLC is the white dots. But in either case very strong performance and, we believe, a great fit for a lot of AI workloads.

Audience member: Which ones for this compared to the last example? How would you see that being different workloads or different requirements?

Stryker: Compared to the dark purple row?

Audience member: Yeah.

Stryker: So it'll depend on a lot of things. It'll depend on what's the size of the raw data set. It'll depend on how many GPUs are you running the data through. So it's difficult to map one row onto a class of use cases without understanding customer requirements. This is really intended as a starting point for that conversation and to say…

Bumgarner: Maybe I can give you an example from the two that we've been talking about. So when you're doing an Internet scrape, and you're importing all of that data in for indexing or an ETL function, that's probably going to overload, because SLC drives, remember, those were pretty small when we first made them. In the three-level cell, you can store three bits per cell instead of a single bit per cell.

So you have 3x capacity with the same amount of die. So as you think through what happens with drives as you get bigger and bigger and bigger, or more bits per cell, your read performance stays about the same, but your write performance starts to go down.

So if you're thinking of it from I'm importing an internet scrape, that's a huge amount of writes really, really quickly, so you're probably going to need something like a TLC drive or something with a balanced performance. But if you're ingesting data over a 24-hour period from a smooth data feed, you could probably buffer that data and write it to a larger capacity drive. So it really depends on the ingest style.
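The capacity side of this trade-off is simple arithmetic, sketched below. The bits-per-cell values (SLC 1, TLC 3, QLC 4) come from the discussion; the point that a cell storing n bits must distinguish 2^n charge levels is general NAND background rather than something stated in the talk, and is included only as one common explanation for why writes get harder as density goes up. The function names are illustrative.

```python
# Bits stored per NAND cell for the cell types mentioned in the talk.
BITS_PER_CELL = {"SLC": 1, "TLC": 3, "QLC": 4}


def voltage_levels(bits):
    """Distinct charge levels a cell must hold to store `bits` bits."""
    return 2 ** bits


def relative_capacity(cell_type, baseline="SLC"):
    """Capacity multiple versus the baseline for the same number of cells."""
    return BITS_PER_CELL[cell_type] / BITS_PER_CELL[baseline]


for name, bits in BITS_PER_CELL.items():
    print(f"{name}: {bits} bit(s)/cell, {voltage_levels(bits)} levels, "
          f"{relative_capacity(name):.0f}x SLC capacity")
```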

Audience member: If it's this complicated, does that mean every single sale is a consultative deal? Like, how do customers understand the complexities well enough to buy the right thing?

Bumgarner: Yeah, and I think it's gonna be like the original days of, if you remember, email databases, right? Back in the day, every one was a consultative sale because it depends how much email your particular organization used to send and how much performance you need from your disk to do that.

And AI is kind of the same thing. It really depends on how you're using the model and what type of data you're going to train the model with. So it's a much more consultative [process] than it normally would be. You really have to understand the pipeline and how much data is flowing through that pipeline.

Audience member: Another interesting line on here is this five-year TCO, which is a curious number. When we were dealing with in-memory databases a few years ago, when they were introduced, we would recommend that customers don't depreciate these servers over more than two years. AI is even more accelerated in terms of how short these servers' useful life is, or the use case that they were purchased for.

So talk to me about, like, the life cycle management strategy of disk versus physical distributed system. Are you guys looking at that TCO as in: You know what, this technology moves slower, therefore there's a longer depreciation cycle? Or is it you just picked 5 years because 5 years has traditionally been a number?

Bumgarner: Actually, it's simpler than that. 5 years is how long we warranty a drive. And that's based on how many drive writes per day you can achieve. And a lot of that has to do with the capacity of the drive. And the interesting thing that's been happening is that a lot of people are using M.2 2TB and 4TB drives today in their data centers, and we're shipping 30TB and 60TB-plus drives.

So now your drive writes per day, or a better way to say it is terabytes written, can go through the roof. So your 5 year TCO, I think, is going to change over time. It might go to 7 [year TCO]. There might be some other ways to think about it. But for the purposes of this calculation right here, this is really talking about power, floor space, all of the other things.

So it kind of goes back to that; I need nine racks of hard disk drives to do what one rack of SSDs could do on a Gen 4. At Gen 5, that widens from a performance perspective. And then Gen 6 and Gen 7, which we can take advantage of, will continue to widen that gap. So there's a lot of factors in that, but the 5 year kind of starts from how long we would warranty an SSD.
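The link between drive writes per day, capacity, and terabytes written can be worked through numerically. The sketch below uses the commonly cited relationship TBW = capacity x DWPD x 365 x warranty years. The 5-year warranty and the 4TB and 60TB capacities are taken from the discussion; the DWPD figure is a made-up placeholder, not a rating for any Solidigm drive.

```python
def terabytes_written(capacity_tb, dwpd, warranty_years=5):
    """Total terabytes written implied by a DWPD rating over the warranty."""
    return capacity_tb * dwpd * 365 * warranty_years


# Hypothetical rating used only to compare capacities; not a product spec.
ASSUMED_DWPD = 0.5

small = terabytes_written(4, ASSUMED_DWPD)    # a 4TB drive
large = terabytes_written(60, ASSUMED_DWPD)   # a 60TB drive

print(f"4TB drive:  {small:,.0f} TB written over 5 years")
print(f"60TB drive: {large:,.0f} TB written over 5 years")
print(f"Ratio: {large / small:.0f}x")
```

At an equal DWPD rating, the total write budget scales directly with capacity, which is why terabytes written grows so quickly as drives move from a few terabytes to tens of terabytes.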

Audience member: You know, historically SSDs have had a challenge with sequential writes and garbage collections and things of that nature. As you start getting more and more full, garbage collection becomes more and more of a concern to some extent. When you're doing sequential writes at the speeds that we're talking about, I can't recall what the numbers are, but hundreds of…

Bumgarner: Random makes it worse. The random writing…

Audience member: Yeah. You’re talking serious numbers here. You sustain that sort of stuff on a drive that's been used for quite a period of time?

Bumgarner: Yeah. We actually have a lot of customers that go past the five year [mark]. And there's also host software that's in development. Have you ever heard of flexible data placement?

Audience member: Yes.

Bumgarner: So my colleague, John, worked on that with a couple of folks from Google and Meta. And that will allow you to sequentially write almost all of the data that's coming in and stripe it across the die so you can get an even level of performance.

So what you've seen is all of that stuff done in the firmware and the flash translation layer and the controller. And some of it is starting to break out onto the host. So the host can do those writes in a smarter, more intelligent way. And so there's things that are happening to fix some of those original garbage collection issues. Great question and flexible data placement, I think, it's kind of the…

Audience member: That's why you have SLC in this and this other solution to some extent to try to manage the write activity.

Bumgarner: Yeah. That's for speed.

Audience member: Now that I'm having a better understanding of the use case for CSAL and how it's presented to the system, talk to me a little bit about the software. I'm leery anytime I hear there's another piece of software I have to manage, especially in the edge. How frequently is that updated? And how do I do life cycle management of CSAL for deployment in the edge?

Bumgarner: It's open source or it's going to be open source pretty quickly. I can't remember if we've fixed that yet. And so there's a whole community that…

Audience member: Alan, it's on GitHub right now.

Bumgarner: It is on GitHub right now?

Audience member: It is on GitHub.

Bumgarner: Okay, thank you. So from a maintenance perspective, you'll see contributions and things from outside. Because one of the reasons we had to do that is a lot of people wanted to be able to touch it themselves and add to it. So it's kind of taken on a life of its own.

Audience member: Is it something you expect to be in a Linux kernel at some point?

Bumgarner: No. No, I would never expect something like that to be in a Linux kernel. It's more of a caching layer just to allow you to, not only to send the writes to the correct place, but to shape the writes.

So you're making it more sequential when you get it to the QLC drive, kind of like what flexible data placement will allow you to do.

Audience member: Yeah. It's pretty consistent with all my other package management and update. So if I'm pulling it down from GitHub, whatever I'm doing to upgrade, update system components, drivers, et cetera, I can use that same process. It's not a proprietary process to update software.

Bumgarner: Yup. Pretty standard.

Stryker: Great questions. Thank you. And to bring us home on this slide the bottom row there, this is where the spotlight's really on our super high capacity solutions. So that’s the P5336, as I mentioned earlier, that’s available in capacities up to 61TB. It's a QLC drive. And so what you're gonna get out of that is great read-optimized performance.

The write speeds will be somewhat less than a TLC. And so, as Alan mentioned, use case [is] where you have a sort of a steady data stream or another setup where you don't have a great need to ingest a whole bunch of data as quickly as possible. But you have a whole bunch of data. It's a great…

Audience member Ray Lucchesi: As I understand this chart: you've got probability 99, random write latency is the best. But the random read, probability 99 random read latency is sort of middling. This is NVMe SSDs, SLC, we're talking top-end solution here. What does that mean? Is that in comparison to disk? Is it in comparison to other SSDs?

Stryker: These are relative to one another on the slide. So what we're trying to show here is the relative strengths within our own portfolio. But what you can expect…

Audience member Ray Lucchesi: So the random read latency, the probability 99 random read latency for this solution, the top one is roughly half of what the P5520 is. Is that what I'm reading?

Bumgarner: You're reading it right, and that's because you can write it into a SLC drive before it flushes to…

Audience member Ray Lucchesi: Random read. Probability 99.

Bumgarner: Did you say write or read?

Audience member Ray Lucchesi: Read.

Bumgarner: Okay.

Audience member Ray Lucchesi: That's what the chart says.

Bumgarner: That one is probably a function of the QLC being buffered through the software, but I need to go look at that one because I think you might have caught a[n error.]

Audience member Ray Lucchesi: So it's a QLC drive on the bottom and it's effectively an SLC drive on the top. And the random read is worse.

Bumgarner: I think the easy way to think about it is a QLC can saturate reads all day long. I mean, you have so many die, bandwidth-wise, right?

Audience member Ray Lucchesi: Is a random read IOPS…

Bumgarner: Even high queue-depth bandwidth wise. Yeah, but random, right? Because you have…

Audience member Ray Lucchesi: I’m not concerned about random write. I’m concerned about random read.

Bumgarner: Really, any of them will meet whatever goals you have on random read. That's an easy way to think about flash. Reading is much easier than writing. And as you go up in capacity, writing becomes just a little more difficult because of the physics of putting the bits in the die.

Audience member Ray Lucchesi: Yeah, I understand the write. I just don't understand the read latency.

Bumgarner: Yeah, I think you might have caught a foil issue there, but thank you.

Audience member: On the top one?

Bumgarner: Yeah, the top.

Audience member Ray Lucchesi: Well, I mean the top one, compared to the middle one.

Stryker: Great feedback. Thank you. Okay, just one or two more comments from us, and then we will give you over to Supermicro to hear from them.

So this is a customer example here. This is folks that we worked with in China at Kingsoft. They have an object storage solution that in the previous generation was hard drive-based with a couple of SSDs on top. But they've moved to all [flash] in the new solution, which they're calling KS3 Extreme Speed. [Read more about this solution in our Kingsoft customer story here.]

And this is data from one of their customers that loaded in a 40TB raw data set preparatory to taking it through cleaning and exposure to the model. And the duration there was reduced from almost 6 hours to 11 minutes by virtue of the hardware improvement there.
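For a sense of scale, the load times quoted here can be converted into an implied speedup and average throughput. This is back-of-the-envelope arithmetic only: it rounds "almost 6 hours" up to a full 6 hours, assumes decimal terabytes (1 TB = 1,000 GB), and treats the load as a steady stream, none of which is stated in the talk.

```python
DATASET_TB = 40          # raw data set size quoted in the talk
OLD_MINUTES = 6 * 60     # "almost 6 hours", rounded up (assumption)
NEW_MINUTES = 11         # load time quoted for the new solution


def throughput_gb_per_s(dataset_tb, minutes):
    """Average load rate in GB/s, assuming 1 TB = 1,000 GB."""
    return dataset_tb * 1000 / (minutes * 60)


speedup = OLD_MINUTES / NEW_MINUTES
old_rate = throughput_gb_per_s(DATASET_TB, OLD_MINUTES)
new_rate = throughput_gb_per_s(DATASET_TB, NEW_MINUTES)

print(f"Speedup: about {speedup:.1f}x")
print(f"Previous generation: about {old_rate:.2f} GB/s")
print(f"New solution: about {new_rate:.1f} GB/s")
```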

Bumgarner: I actually do have the answer to your question now that I've thought it through. The P99 random read latency is coming from QLC. So it's going to be the same for either one, depending on how you're looking at it, because you're not doing reads from your SLC. Your SLC is there just for a flush buffer. So you're writing to your SLC, you're flushing to the QLC, but you're always reading from QLC.

Audience member Ray Lucchesi: Alright, so the QLC drive on the middle box is actually better than the QLC drive on the top box. That's what you're saying. From a read latency, probability 99 perspective.

Bumgarner: It's actually the same across all three from a QLC perspective for P99 read latency.

Audience member Roger Corell: In all of these swim lanes, all the reads are coming from QLC or in the case of the middle lane where there's a 5520 option, it's coming from TLC and they will all have effectively the same high read performance. So thank you for pointing it out. It looks like we've got a little artifact to work here.

Audience member: Okay.

Stryker: Thank you, Roger.

Audience member Frederic Van Haren: You have an idea of your drive standing up in an object search solution versus the public solution.

Bumgarner: I would love to have that data, if you have it. I don't know any analyst that's got that particular data. But I know there's a lot of people that make object store file systems that are really interested in being able to index better once it's already been written without having to pull the data out.

Audience member Frederic Van Haren: But you would say you would agree that the trend is more towards more and more object?

Bumgarner: From what I've seen, yes. And I think that if you look at all of the IDC or Gartner or, you know, big analyst reports, you can see the amount. And some of them actually have it broken out by industry, like film, data warehouse, things like that. And there's always this little sliver of indexed data or what they call database data, even if it's a wide database. And then there's this massive 96%, 95% of unindexed object data.

Audience member: I think if you talk to AWS, you might have a different discussion.

Stryker: All right. Great. So here's our plug for some of our partners. We work with the best in the business and we're proud to do so. And we're proud to have collected some testimonials over time. I won't read any of these to you verbatim, but we have folks out there deploying these solutions today seeing the benefit in their real world workloads. And I expect you'll get some more detail from Supermicro on the particular ways in which that plays out for them.

So, to wrap up our portion of the presentation, it turns out, shocker, AI might be kind of a big deal. Storage matters for many reasons. The data sets are getting bigger and bigger. The models are getting more and more sophisticated. And so storage needs to be able to scale efficiently with all of that in order to keep GPUs fed and keep utilization high, [to] make those AI servers as efficient as possible during every stage of the workflow. The cost piece is a significant component of the SSD story as well, for reasons we discussed in terms of power efficiencies, rack space, data center footprint, and so forth.

Not all storage is equally up to the task. There's a prevailing notion among some that yesterday's technology is good enough for a while. But our position is that in order to really forge ahead here and enable new solutions, new levels of efficiency and output, SSDs are a required ingredient as part of the architecture.

And finally, the strength of [the] Solidigm product portfolio, the breadth of the offerings we have across TLC, QLC, SLC, and software is unique in the industry. [It] allows us to really target for specific customers, for specific applications, those storage devices or solutions that are going to help solve their unique challenges and accomplish their goals.

And we do all that with and through our partners as well, including the great folks, Wendell, [Paul,] and others that you'll hear from at Supermicro. So we will take a break and give you a chance to hear from them about how some of this works in their systems.

For more, watch the Supermicro discussion here.

About the Speakers

Ace Stryker is the Director of Market Development at Solidigm where he focuses on emerging applications for the company’s portfolio of data center storage solutions. A Solidigm employee from day one, he previously worked as a solution architect and technical marketing engineer in Intel's storage and memory group.

Alan Bumgarner is Director of Strategic Planning for Storage Products in the Solidigm Data Center Products Group. He began his career at Intel Corporation in Folsom, CA more than 20 years ago. Since then his roles have included front line technical support, remote server management of multiple Intel data centers, product/channel/technical marketing, field sales, and strategic product planning and management. These roles took him from California to New Jersey, Texas, and Oregon, and finally back to Folsom, CA. Alan earned a Bachelor of Science in Business Administration Systems from the University of Phoenix. In his free time he enjoys cooking and also likes to ski, bike, hike, and work on cars.

Notes

[1] Colo facility is short for colocation facility or Co-location data center, which means any data center where multiple tenants can exist. It is a data center with server space, networking hardware, or storage devices for rent. For more information, see https://www.techtarget.com/searchdatacenter/definition/colocation-colo