The Role of Solidigm SSDs in AI Storage Advancement

As artificial intelligence rapidly advances to fuel humanity’s aspirations, computing power has had to grow as well. Clusters of thousands of GPUs are emerging everywhere, powered by high-throughput, low-latency networks and deep learning models. This evolving market prompts deep contemplation among AI architects. One of the most important questions is this: What kind of AI storage infrastructure can keep AI accelerators (GPUs, CPUs, and others) and network devices running at full capacity without idle time?

Phases of an AI project cycle

An analysis of industry practices reveals that the typical AI project cycle consists of five main phases:

- Data ingest

- Data preparation

- Model development (training)

- Model deployment (inference)

- Archive

To understand AI's storage requirements, it's essential to understand the nature of primary input/output (I/O) operations in each phase and to consider them collectively to form a comprehensive view.

Figure 1. Overview of a typical AI data pipeline

Phase 1: Data ingest

The pipeline kicks off with the first step of the process, in which the raw data is obtained that will be used to eventually train the model. Depending on the goals of the model, this data may take many forms. For an LLM, it could be a huge batch of public website data, such as the corpus of information maintained and updated by Common Crawl. For other applications, it might be LIDAR data from autonomous vehicles, medical imaging records, or audio recordings of whale calls. The nature of the raw data depends entirely on the developer’s goals.

This stage tends to induce large sequential write activity on the storage subsystem as the raw data is written to disk.

Phase 2: Data preparation

Before diving into training, it's crucial to thoroughly prepare the data that will be fed into the training cluster.

1. Data discovery, extraction, and preprocessing

The raw data used for AI modeling inherits the classic “3V” characteristics of traditional big data: volume, velocity, and variety. The sources of data can range from event logs, transaction records, and IoT inputs to CRM, ERP, social media, satellite imagery, economics, and stock transactions. It’s necessary to extract data from these diverse sources and integrate it into a temporary storage area within the data pipeline. This step is typically referred to as "extraction."

The data is then transformed into the suitable format for further analysis. In its original source systems, data is often chaotic, making it challenging to interpret. Part of the transformation objective is to enhance data quality. These include:

1. Cleaning up invalid data

2. Removing duplicates

3. Standardizing measurement units

4. Organizing data based on its type

During the transformation phase, data is also structured and reformatted to align with its specific business purposes.

2. Data exploration and data set split

Data analysts employ visualization and statistical techniques to describe the characteristics of a dataset, such as its scale, quantity, and accuracy. Through exploration, they identify and examine relationships between different variables, the structure of the dataset, the presence of anomalies, and the distribution of values. Data exploration allows analysts to delve deeper into the raw data.

Exploration aids in identifying glaring errors, gaining a better understanding of patterns within the data, detecting outliers or unusual events, and uncovering intriguing relationships between variables. Once data exploration is complete, the dataset is typically split into training and testing subsets. These subsets are used separately during model development for training and testing purposes.

3. Feature extraction, feature selection, and pattern mining

The success of an AI model hinges on whether the selected features can effectively represent the classification problem under study. For instance, consider an individual member of a choir: Features could include gender, height, skin color, and education level, or they could focus solely on vocal range.

Using vocal range as the feature, as opposed to the previous four dimensions, reduces the dimensionality to just one-fourth (meaning significantly less data), yet it might better encapsulate the relevant essence of the choir member.

To avoid the perils of high dimensionality and reduce computational complexity, the process of identifying the most effective features to lower feature dimensionality is known as feature selection. Within an array of features, uncovering their intrinsic relationships and logic, such as which ones are mutually exclusive or which ones coexist, is termed pattern mining.

4. Data transformation

For various reasons, a need might arise to transform data. It could be driven by a desire to align some data with others, facilitate compatibility, migrate portions of data to another system, establish connections with other datasets, or aggregate information within the data.

Common aspects of data transformation include converting types, altering semantics, adjusting value ranges, changing granularity, splitting tables or datasets, and transforming rows and columns, among others.

Thanks to the mature open-source project communities, we have a wealth of reliable tools at our disposal during the data ingest and preparation phase. These tools enable us to accomplish ETL (Extract, Transform, Load) or ELT (Extract, Load, Transform) tasks. Examples include:

Additionally, for tasks like creating numerous sets of features, we can rely on tools such as:

5. Storage characteristics for the data preparation phase

During the data preparation phase, the typical workflow involves reading data randomly and writing processed items sequentially. It's imperative for the storage infrastructure to provide low latency for small random reads while simultaneously delivering high sequential write throughput.

Phase 3: Model development and training

Once the preparation of the training dataset is complete, the next phase involves model development, training, and hyperparameter tuning. The choice of algorithm is determined by the characteristics of the use case, and the model is trained using the dataset.

1. AI frameworks

Model efficiency is assessed against a test dataset, adjusted as needed, and finally deployed. The AI framework is continually evolving, with popular frameworks including:

And more

This stage places extremely high demands on computational resources. Storage is crucial, as feeding data to these resources faster and more efficiently becomes a priority to eliminate resource idleness.

During model development, the dataset continuously expands, often with numerous data scientists requiring simultaneous access from different workstations. They dynamically augment entries among thousands of variations to prevent overfitting.

2. Storage capacity scalability and data sharing

While storage capacity starts to become crucial at this stage, the increasing number of concurrent data access operations means scalable performance is the true key to success. Data sharing between workstations and servers, along with rapid and seamless capacity expansion, are vital storage capabilities.

As training progresses the dataset's size multiplies, often reaching several petabytes. Each training job typically involves random reads, and the entire process comprises numerous concurrent jobs accessing the same dataset. Multiple jobs competing for data access intensify the overall random I/O workload.

The transition from model development to training necessitates storage that can scale without interruption to accommodate billions of data items. It also requires rapid, multi-host random access; specifically, high random read performance.

Training jobs often decompress input data, augment or perturb it, randomize input order, and require data item enumeration to query storage for lists of training data items, especially in the context of billions of parameters.

3. Checkpointing: Bursts of large sequential writes

The sheer scale of training leads to new demands. Today's training jobs may run for days, weeks, or even months. Consequently, most jobs write periodic checkpoints to recover quickly from a failure, minimizing the need to restart from scratch.

Therefore, the primary workload during training consists of random reads, occasionally punctuated by large sequential writes during checkpoints. Storage systems should be capable of sustaining the intensive random access required by concurrent training jobs, even during bursts of large sequential writes during checkpointing.

4. Validation and quantization

Once the model training is complete, a couple of key steps remain prior to deployment.

During validation, the developer tests the model using a separate data set (or portion of the original data withheld from training) to ensure that it’s behaving as expected and generating reasonable outputs. If not, it’s an indication that the training process should be revisited and improved.

The other key activity is model quantization, which includes reducing the precision of individual model parameters. Often the outcome of the training process is, for example, 16-bit parameters where 4 would suffice. By quantizing, the model becomes both smaller in size and faster during inference.

It’s worth noting quantization can occur at several points in model development. The two most common are post-training quantization (PTQ), which happens just prior to deployment, and quantization-aware training (QAT), an approach that involves downsizing parameters in real time during the training stage.

5. Summary of the model development phase

In summary, AI model development is a highly iterative process where successive experiments confirm or refute hypotheses. As models evolve, data scientists train them using sample datasets, often through tens of thousands of iterations.

For each iteration, they augment data items and slightly randomize them to prevent overfitting, creating models that are accurate for the training dataset but also adaptable to live data. As training advances, datasets grow, leading to a transition from data scientists' workstations to data center servers with greater computational and storage capabilities.

Hear from Solidigm partner VAST Data about the stages of the AI data pipeline and how they’re shaping storage requirements from start to finish

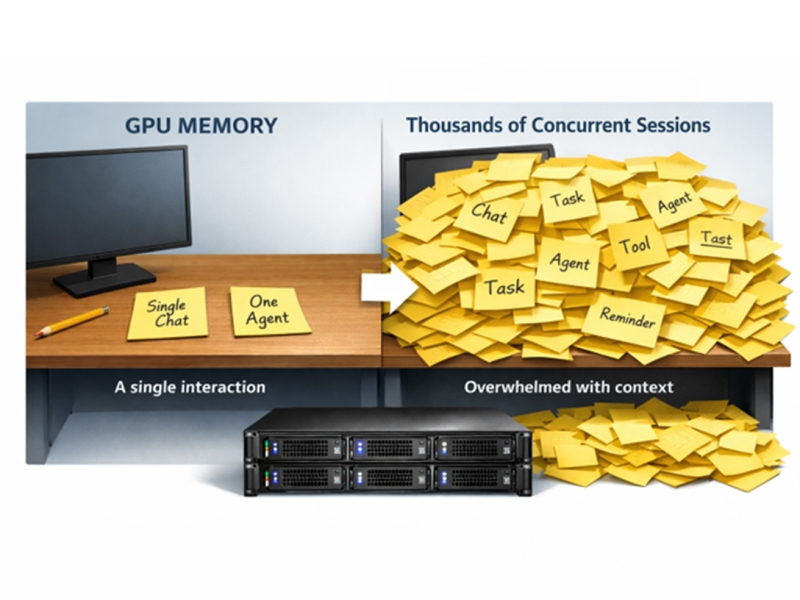

Phase 4: Model deployment and inference

Once model development is completed, it's time to deploy and go live with the service. In this inference phase, real-world data is fed into the model and, ideally, the output provides valuable insights. Often, the model undergoes ongoing fine-tuning. New real-world data imported into the model during the inference phase is incorporated into the retraining process, enhancing its performance.

1. Fine-tuning during real-world application

AI storage infrastructure must operate seamlessly around the clock throughout a project's lifecycle. It should possess self-healing capabilities to handle component failures and enable non-disruptive expansion and upgrades.

Data scientists' need for production data to fine-tune models and explore changing patterns and objectives underscores the importance of a unified platform—a single storage system that serves all project phases. Such a system allows development, training, and production to easily access dynamically evolving data.

2. Model readiness for production

When a model consistently produces accurate results, it's deployed into production. The focus then shifts from refining the model to maintaining a robust IT environment. Production can take various forms, whether interactive or batch oriented. Continuous use of new data is instrumental in refining models for enhanced accuracy, and data scientists regularly update training datasets while analyzing model outputs.

3. Retrieval-augmented generation

One development of increasing importance in generative AI models is retrieval-augmented generation (RAG), which provides a mechanism for the trained model to connect to additional knowledge sources during inferencing.

Consider an example LLM without RAG, trained 6 months ago on a public dataset that was current at the time. Several shortcomings become evident:

- The model cannot meaningfully answer prompts related to facts or events less than 6 months old.

- It cannot give responses specific to the user’s private data, such as email inbox contents or corporate SharePoint files.

- It has little insight into whether certain sources are more authoritative or useful to the user than others.

A developer could solve these problems by retraining the model frequently (expensive) or adding private data to the training set (potentially risky or impossible). Or they could use RAG to connect the pre-trained model to a public search engine, news sites, a corporate email server, etc.

When RAG is used, the LLM in our example receives an input from the user, routes that prompt to additional knowledge sources, and returns both the original input and the added context for inferencing, creating more useful responses.

Phase 5: Archive

Once your inference inputs and outputs have been fed back into the pipeline for fine-tuning of the model, it’s time for the final step in the process. As regulatory interest in the AI boom intensifies, data retention requirements are increasing. The need to store data from across the AI pipeline for compliance and audit purposes is driving higher storage write performance and capacity needs.

Table 1 summarizes each phase of the AI project cycle and its respective I/O characteristics and consequent storage requirements.

| AI Phase | I/O Characteristics | Storage Requirements | Impact |

|---|---|---|---|

| Data ingest | Large sequential writes | High sequential write throughput | Optimized storage means the ingest process can occur more quickly |

| Data preparation | Reading data randomly; writing preprocessed items sequentially | Low latency for small random reads; high sequential write throughput | Optimized storage means the pipeline can offer more data for training, which leads to more accurate models |

| Model development (training) | Random data reads | Scalability in multi-job performance and capacity; optimized random reads; high sequential write performance for checkpointing | Optimized storage improves utilization of expensive training resources (GPU, TPU, CPU) |

| Model deployment (inference) | Mixed random reads and writes | Self-healing capabilities to handle component failures; non-disruptive expansion and upgrades; same features as training stage if the model undergoes ongoing fine-tuning | Business requires high availability, serviceability, and reliability |

| Archive | Writes that can occur in batch (sequential) or real-time (random) fashion | High write performance, especially in generative AI models | Better data retention for compliance and audit purposes |

Table 1. AI project cycle with I/O characteristics and consequent storage requirements

Key storage characteristics for AI deployments

An AI project that starts with a single-chassis system during initial model development needs the flexibility to expand as data requirements grow during training and as more live data is accumulated in production. To achieve higher capacity, two key strategies are employed at the infrastructure level: increasing individual disk capacity and expanding the cluster size of storage enclosures.

1. Capacity

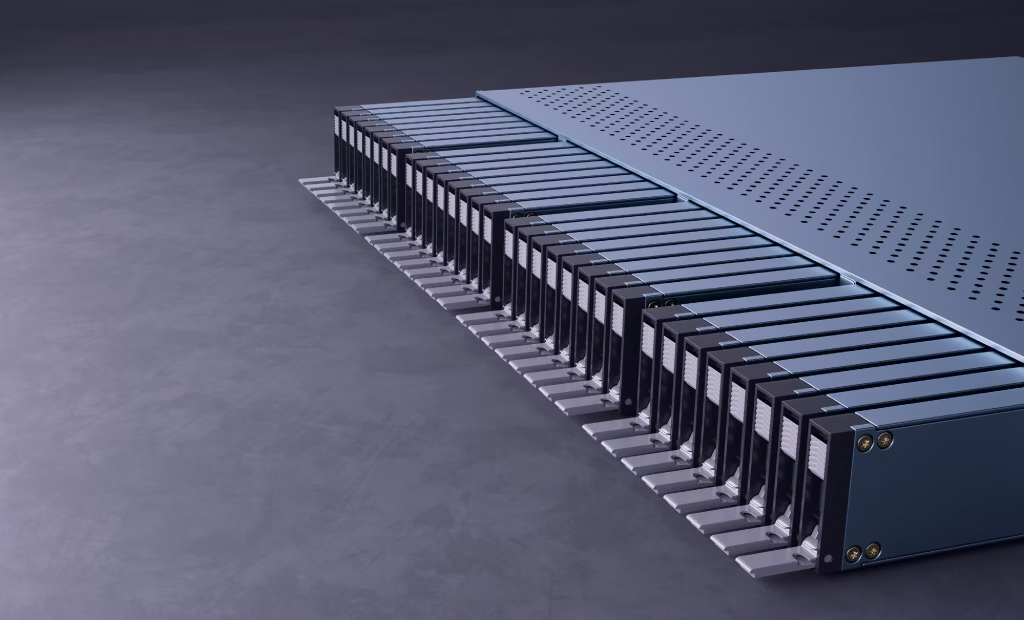

Enhancing the capacity of individual disks and improving the horizontal scalability of storage nodes are crucial factors. At the disk level, products like the Solidigm D5-P5336 QLC SSD are now reaching capacities of up to 61.44TB. At the storage enclosure level, EDSFF (Enterprise and Datacenter Standard Form Factor) showcases unmatched storage density.

For U.2 15mm form factor drives, a typical 2U enclosure accommodates 24 to 26 disks, resulting in a raw capacity of up to 1.44PB. Upgrading to E1.L 9.5mm form factor disks, as Figure 1 shows, a 1U enclosure supports 32 disks. With 2U, the storage density is roughly 2.6x higher than a 2U U.2 enclosure. A comparison is shown in Table 2.

| Form Factor | Number of 60TB drives in 2U rack space | Capacity per 2U rack space |

|---|---|---|

| Legacy U.2 15mm | 24 | 1.47PB |

| E1.L 9.5mm | 64 | 3.93PB |

Table 2. 2U rack unit capacity based on drive form factor

It's worth noting that higher storage density in a single enclosure translates to a significant reduction in rack space occupied by storage nodes, the number of network ports required, and the power, cooling, spare parts, and manpower needed to operate them.

![System designed for E1.L SSD form factor]](https://s7d9.scene7.com/is/image/Solidigm/fig-1-system-designs-for-e1-l-mobile?fmt=avif)

Figure 2. System designed for E1.L

2. Data-sharing capability

The data-sharing capability of storage is of paramount importance, given the collaborative parallel work of multiple teams mentioned earlier and the desire to train more data before delivery. This is reflected in the high IOPS, low latency, and bandwidth of the storage network. Additionally, support for multipath is essential so that network services can continue operating in the event of network component failures.

Over time, off-the-shelf networks have now consolidated to Ethernet and InfiniBand. InfiniBand has a rich data rate, leading bandwidth and latency performance, with RDMA native support. As a result, InfiniBand is a strong network to support AI storage. The most popular Ethernet bandwidths now are 25Gbps, 40Gbps, and 100Gbps. Recent AI deployments are leveraging 200Gbps and 400Gbps networks.

3. Adaptability to varying I/O

AI storage performance should be consistent for all types of I/O operations. Every file and object should be accessible in approximately the same amount of time ensuring that time-to-first-byte access remains consistent, whether it's for small 1KB item labels or large 50MB images.

4. Parallel network file operations

In AI projects, common tasks such as bulk copying, enumeration, and changing properties require efficient parallel network file operations. These operations significantly expedite AI model development. Originally developed by Sun Microsystems in 1984, NFS (Network File System) remains the most dominant network file system protocol to date. NFS over Remote Direct Memory Access (NFSoRDMA) is particularly suited for compute-intensive workloads that involve transferring large volumes of data. RDMA's data movement offload feature reduces unnecessary data copying, enhancing efficiency.

5. Summary of key AI storage characteristics

AI storage solutions should offer ample capacity, robust data sharing capabilities, consistent performance for varying I/O types, and support for parallel network file operations. These requirements ensure that AI projects can effectively manage their growing datasets and meet the performance demands of AI model development and deployment.

Conclusion

AI development continues to surpass our wildest expectations. In the urgency to feed computational behemoths with more data at a faster rate, there is no room for idle processing time and power. Solidigm offers drives in various form factors, densities, and price points to meet the needs of various AI deployments. High density QLC SSDs have been proven in performance, capacity, reliability, and cost.

![TLC-only solution transitions to SLC/TLC+QLC Legacy configuration rack with TLC SSDs to new configuration rack with SLC and TLC SSDs plus QLC SSDs.]](https://s7d9.scene7.com/is/image/Solidigm/fig-2-tlc-only-solution-transitions-to-slc-tlc-and-qlc?ts=1737073129873&dpr=off)

Figure 3. TLC-only solution transitions to SLC/TLC+QLC

Together with CSAL and the Solidigm D7-P5810 SLC SSD and D5-P5336 SSD customers can harness the ability to tune their deployments in performance, cost, and capacity.1 It is clear that with an innovative complete stack and open source storage solution, Solidigm SSDs have a unique advantage in bolstering AI storage advancement.

Figure 4. Write shaping cache with CSAL

About the Authors

Sarika Mehta is a Senior Storage Solutions Architect at Solidigm with over 15 years of storage experience throughout her career at Intel’s storage division and now at Solidigm. Her focus is to work closely with Solidigm customers and partners to optimize their storage solutions for cost and performance. She is responsible for tuning and optimizing Solidigm’s SSDs for various storage use cases in a variety of storage deployments ranging from direct-attached storage to tiered and non-tiered disaggregated storage solutions. She has diverse storage background in validation, performance benchmarking, pathfinding, technical marketing, and solutions architecture.

Yi Wang is a Field Application Engineer at Solidigm. Before joining Solidigm, he held technical roles with Intel, Cloudera, and NCR. He holds "Cisco Certified Network Professional," "Microsoft Certified Solutions Expert," and "Cloudera Data Platform Administrator" certifications.

Wayne Gao is a Principal Engineer and Solution Storage Architect at Solidigm. He has worked on Solidigm’s Cloud Storage Acceleration Layer (CSAL) from pathfinding to commercial release. Wayne has over 20 years of storage developer experience, has four U.S. patent filings/grants, and is a published EuroSys paper author.

Ace Stryker is the director of market development at Solidigm, where he focuses on emerging applications for the company’s portfolio of data center storage solutions.

Notes

[1] CSAL introduction: https://www.solidigm.com/products/technology/cloud-storage-acceleration-layer-write-shaping-csal.html