This Article Describes Performance

By Wayne Gao, Principal Engineer and Storage Solutions Architect at Solidigm.

Summary

At the Linux Storage, Filesystem, Memory Management, and BPF Summit (LSFMM+BPF), an intriguing topic was discussed: the Linux kernel's 7 GB/s limit on writes that use buffered I/O.

The root cause identified is the reliance on a single kworker thread to handle buffered I/O tasks. With the increasing adoption of high-bandwidth Gen5 NVMe* drives, this limitation becomes more significant. Following the discussion reported on LWN ("Measuring and improving buffered I/O", LWN.net), we conducted experiments to measure and improve buffered I/O performance.

Solidigm™ test results and analysis

Test Configuration

| Storage Server: Intel® Gen5 BNC | |
|---|---|
| OS | Fedora Linux 40 (Server Edition) |
| Kernel | Linux salab-bncbeta02 6.8.5-301.fc40.x86_64 #1 SMP PREEMPT_DYNAMIC Thu Apr 11 20:00:10 UTC 2024 x86_64 GNU/Linux |
| CPU Model | Intel® Gen5 BNC |
| NUMA Node(s) | 2 |
| DRAM Installed | 256GB (16x16GB DDR4 3200MT/s) |
| Huge Pages Size | 2048 kB |
| Drive Summary | 2x Gen5 TLC from Hynix SOLIDIGM SB5PH27X076T G70YG030 |
| FIO | 3.37 or later |
| FIO command | ./fio --name=test -numjobs=128 -ioengine=sync -iodepth=1 --direct=1 -rw=write -bs=1M --scramble_buffers=1 -size=20G -directory=/mnt/test/ --group_reporting --runtime=240 |
| File system | XFS and Ext4 |
| Mdraid | RAID0 across two Gen5 NVMe SSDs |
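The fio command listed in the configuration sets `--direct=1`, which corresponds to the non-buffered runs. The sketch below assumes the buffered runs used the same job with only `--direct=0` changed; the source does not show that second command, so treat this as an illustration. The helper name `fio_command` is hypothetical, and the options are written with double dashes for consistency.

```python
import shlex


def fio_command(direct: bool, directory: str = "/mnt/test/") -> list[str]:
    """Build the fio argv for the sequential write test.

    Only the --direct flag differs between the two variants; that the
    buffered runs used --direct=0 is an assumption, not stated in the source.
    """
    return [
        "./fio",
        "--name=test",
        "--numjobs=128",
        "--ioengine=sync",
        "--iodepth=1",
        f"--direct={1 if direct else 0}",
        "--rw=write",
        "--bs=1M",
        "--scramble_buffers=1",
        "--size=20G",
        f"--directory={directory}",
        "--group_reporting",
        "--runtime=240",
    ]


if __name__ == "__main__":
    # Print both variants so they can be copied into a shell.
    for label, direct in (("buffered", False), ("direct", True)):
        print(f"{label}: {shlex.join(fio_command(direct))}")
```

Keeping every other option identical means any bandwidth difference between the two runs can be attributed to the page cache and writeback path rather than to the workload shape.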

Test Result summary:

| Test | File system | Buffered I/O | File write bandwidth |
|---|---|---|---|
| 1 | XFS | True | 14.6 GB/s |
| 2 | XFS | False | 14.3 GB/s |
| 3 | Ext4 | True | 5383 MB/s (about 5.4 GB/s) |
| 4 | Ext4 | False | 14.5 GB/s |
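To make the gap easier to read, the figures in the table can be turned into a buffered-to-direct ratio per file system. This is a small arithmetic sketch using only the four reported values; it assumes decimal units (1 GB/s = 1000 MB/s), and the names `RESULTS_GB_S` and `buffered_to_direct_ratio` are illustrative.

```python
# Reported file write bandwidth in GB/s, keyed by (file system, I/O mode).
# The Ext4 buffered figure is reported as 5383 MB/s; decimal units are assumed.
RESULTS_GB_S = {
    ("XFS", "buffered"): 14.6,
    ("XFS", "direct"): 14.3,
    ("Ext4", "buffered"): 5383 / 1000,
    ("Ext4", "direct"): 14.5,
}


def buffered_to_direct_ratio(fs: str) -> float:
    """Return buffered bandwidth divided by direct bandwidth for one file system."""
    return RESULTS_GB_S[(fs, "buffered")] / RESULTS_GB_S[(fs, "direct")]


if __name__ == "__main__":
    for fs in ("XFS", "Ext4"):
        print(f"{fs}: buffered/direct = {buffered_to_direct_ratio(fs):.2f}")
```

With these inputs, XFS buffered writes come out slightly above parity with direct I/O, while Ext4 buffered writes reach a little over a third of the direct I/O bandwidth.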

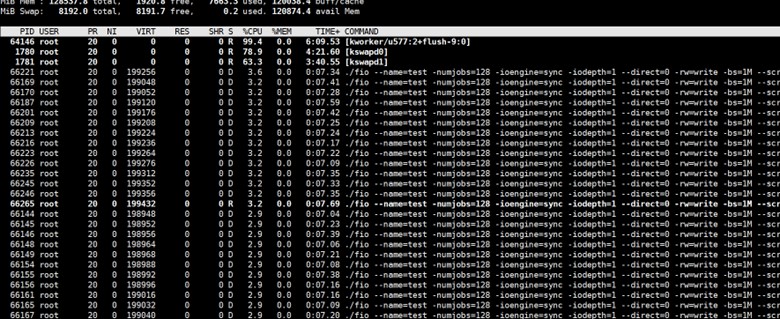

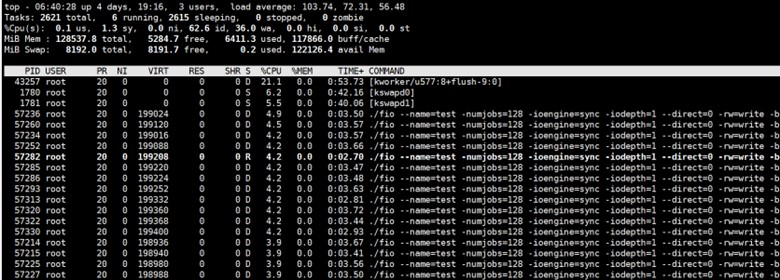

From the results, we observe that with the latest Intel® Gen5 BNC platform, Gen5 NVMe RAID0, and kernel 6.8, the single-kworker bottleneck occurs only with the Ext4 file system, where buffered writes reach 5383 MB/s against 14.5 GB/s with direct I/O. The XFS file system performs well with buffered I/O (14.6 GB/s), with flush kworker CPU usage at only 20%. See Figure 1 and Figure 2 for detailed visual analysis.

Figure 1. Ext4 shows 100% CPU on flush kworker thread

Figure 2. XFS shows 20% CPU on flush kworker thread
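A quick way to find the flush kworker threads referred to in Figures 1 and 2 is to scan `/proc` for worker names. The sketch below assumes that the kernel labels active writeback workers with names containing `flush-` (for example something like `kworker/u64:1-flush-259:0`); that naming pattern and the helper names are assumptions for illustration, not something taken from the test logs.

```python
import os


def is_flush_kworker(comm: str) -> bool:
    """Return True if a thread name looks like a writeback (flush) kworker.

    Assumes the 'kworker/...' prefix and a 'flush-' tag in the name.
    """
    comm = comm.strip()
    return comm.startswith("kworker/") and "flush-" in comm


def list_flush_kworkers(proc_root: str = "/proc") -> list[tuple[int, str]]:
    """Return (pid, name) pairs for threads that look like flush kworkers."""
    found = []
    try:
        entries = os.listdir(proc_root)
    except OSError:
        return found  # no procfs here (for example, not running on Linux)
    for entry in entries:
        if not entry.isdigit():
            continue  # skip non-process entries such as 'meminfo'
        try:
            with open(os.path.join(proc_root, entry, "comm")) as f:
                comm = f.read().strip()
        except OSError:
            continue  # the process exited while we were scanning
        if is_flush_kworker(comm):
            found.append((int(entry), comm))
    return found


if __name__ == "__main__":
    for pid, name in list_flush_kworkers():
        print(pid, name)
```

The PIDs it prints can then be watched in a tool such as `top` while the fio job runs to see whether a single worker is saturating a core.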

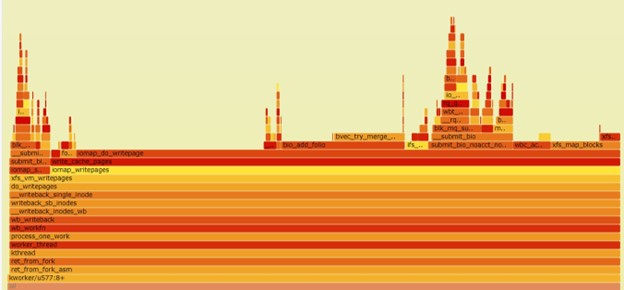

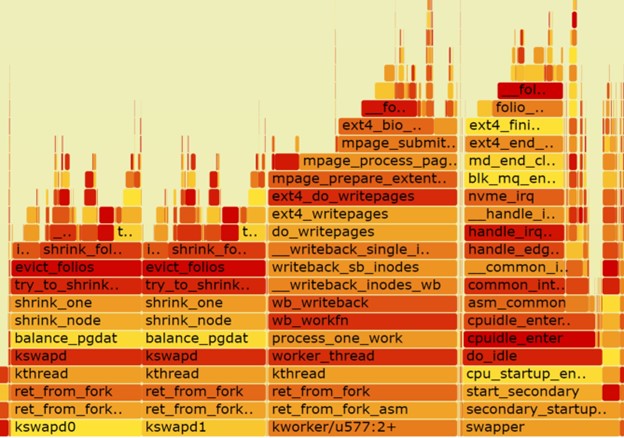

- Flame graph analysis.

- Figure 3: The XFS hot spot is in iomap_do_writepage, which uses the latest Linux kernel memory framework (folio) for better multipage memory management.

- Figure 4: Ext4 is CPU-bound on the flush kworker, with the hot path ending in __folio_start_writeback. This suggests that XFS, the first file system to implement iomap and folio, might be more efficient, while Ext4 could benefit from further improvements.

The LWN article's conclusion stands: each file system volume currently has only one kworker thread for writeback, even with kernel 6.8. Different file systems use this single kworker thread with varying efficiency, which affects bandwidth. For high-end NVMe configurations such as Gen5 drives in RAID0 or RAID5, using direct I/O can provide better bandwidth and save DRAM for other cloud-native tasks.
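For applications that want to follow the direct I/O recommendation, the difference at the system-call level is mainly the `O_DIRECT` open flag plus an aligned buffer. The following is a minimal Linux-oriented sketch, not the method used in the tests above: the function name `write_file` and the 1 MiB block size are assumptions, and `O_DIRECT` may be rejected by some file systems.

```python
import mmap
import os


def write_file(path: str, total_bytes: int, block_size: int = 1 << 20,
               direct: bool = False) -> int:
    """Write total_bytes to path in block_size chunks; return bytes written.

    With direct=True the file is opened with O_DIRECT (Linux-only), which
    bypasses the page cache and therefore the flush kworker.
    """
    if total_bytes % block_size != 0:
        raise ValueError("total_bytes must be a multiple of block_size")
    flags = os.O_WRONLY | os.O_CREAT | os.O_TRUNC
    if direct:
        flags |= os.O_DIRECT
    # An anonymous mmap is page-aligned, which O_DIRECT typically requires.
    buf = mmap.mmap(-1, block_size)
    buf.write(b"\xa5" * block_size)
    fd = os.open(path, flags, 0o644)
    written = 0
    try:
        for _ in range(total_bytes // block_size):
            written += os.write(fd, buf)
        if not direct:
            os.fsync(fd)  # force dirty pages out so the data reaches the device
    finally:
        os.close(fd)
        buf.close()
    return written


if __name__ == "__main__":
    print(write_file("buffered.bin", 4 << 20, direct=False))
```

Calling the same function with `direct=True` on a file system that supports it skips the page cache, so the data is not held in DRAM as dirty pages waiting for writeback.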

Figure 3. XFS hot spot on iomap_do_writepage

Figure 4. Ext4 flush worker is a 100% hot spot; kswapd usage is relatively high too

Conclusion

Based on discussions with top CSP kernel storage team leaders in PRC, it's clear that the new AI era demands higher bandwidth, making this a valid test case for the Linux kernel storage stack.

- Bypass buffered I/O by using direct I/O on high-end Gen5 NVMe drives.

- If buffered I/O is needed, XFS appears more efficient than Ext4.

- The Solidigm solution team will continue discussions with kernel FS developers for further insights and updates.
