How QLC SSDs Provide Value, Performance, and Density

Solidigm presents at Gestalt IT’s Storage Field Day

During Gestalt IT’s Storage Field Day in September 2023, Solidigm presented on how QLC SSDs are designed for value, performance, and density. The volume of data being generated, and the range of applications that use it, grows every day. Streaming services, data mining, and machine learning are just a few examples of read-intensive workloads that demand innovative storage solutions.

This article explores the use of QLC SSDs to address changing workloads and read-intensive storage requirements. It provides an overview of the key features and capabilities of QLC SSDs, followed by an introduction to the Solidigm D5-P5336.

Changing workloads lead to changing storage needs

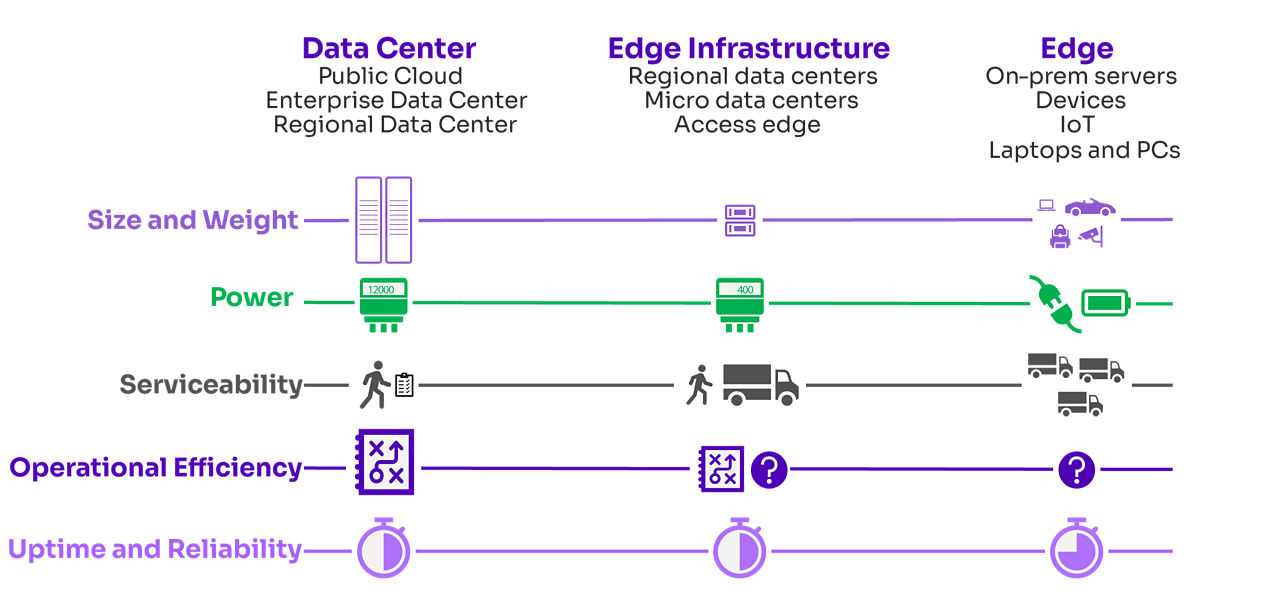

Data growth is changing both the kinds of workloads that store and consume data and where that data is stored. For example, AI and content delivery networks (CDNs) have increased demand for read-intensive back-end storage, underscoring the need for high-capacity, high-density storage. Another example is edge computing, in which data is stored as close to its source as possible. Figure 1 captures these changing applications and their requirements.

Figure 1. Overview of changing data demands related to data centers, edge infrastructure, and edge devices.

Figure 1 shows three areas in which storage requirements are evolving: data centers, edge infrastructure, and edge devices. Size and weight constraints affect edge devices, including on-premises servers, internet of things (IoT) devices, desktop computers, laptops, and other portable devices. Moving from data centers toward edge devices, demand grows for low-power solutions, excellent reliability, and operational efficiency in unpredictable conditions.

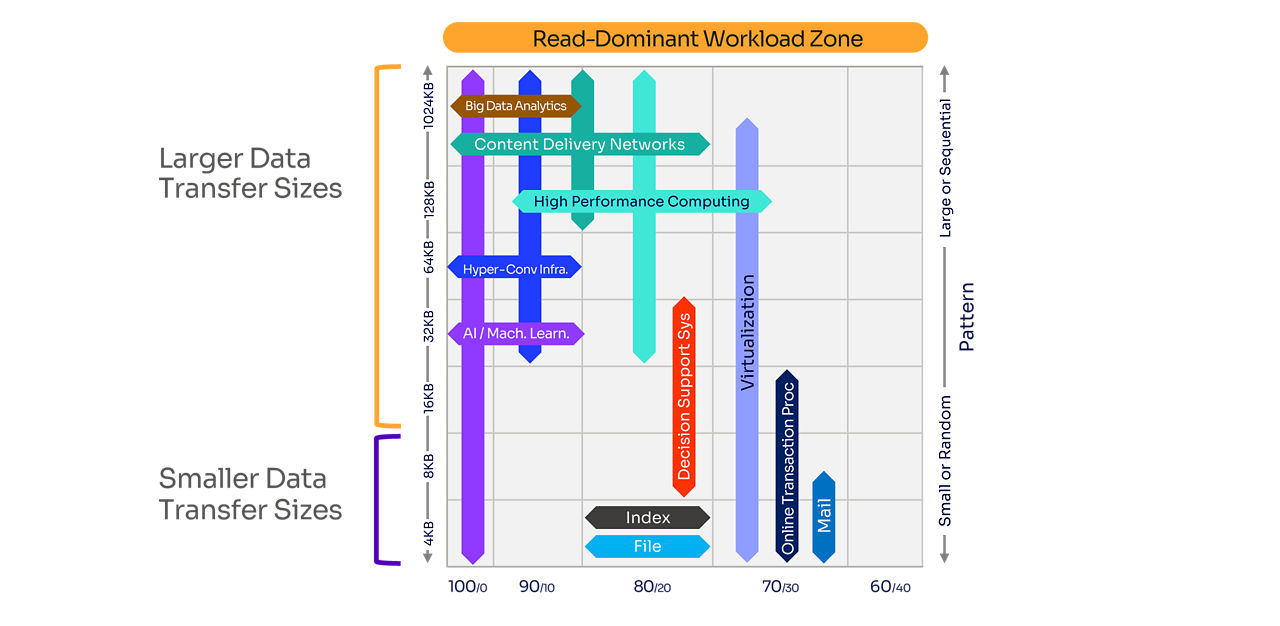

Figure 2. A plot illustrating the data needs found in modern data centers.

Many of the most common workloads in today’s data center and cloud environments are read-dominant, as Figure 2 shows. These approximate workload characterizations are based on data collected worldwide across cloud and enterprise environments. The left axis of the chart shows transfer sizes from 4KB to 1MB and larger, the bottom axis shows the read/write mix, and the right side shows the access pattern, from small/random to large/sequential.

Critical storage needs for the kinds of applications shown in Figure 2 include:

- Read-intensive storage that is still tunable

- High-capacity storage

- High-density storage

- Scalable storage

- Reliable storage

Other needs include low power consumption and ease of service. In the past, hard disk drives (HDDs) were the primary answer to these storage needs, but new solutions, including solid-state drives (SSDs), are emerging.

SSD alternatives to traditional HDDs

For many applications, QLC NAND SSDs are an effective alternative to HDDs. QLC stands for quad-level cell, meaning each memory cell stores 4 bits; NAND is a type of non-volatile flash memory that retains data without power; and SSD stands for solid-state drive.

1. QLC SSDs: Increased capacity and cost-effectiveness

QLC NAND SSDs, which work exceptionally well for read-intensive workloads, store more bits per cell than single-level cell (SLC, 1 bit), multi-level cell (MLC, 2 bits), and triple-level cell (TLC, 3 bits) NAND, which gives them greater data capacity. As a result, QLC NAND SSDs can compete with flash options that store fewer bits per cell, because they provide high-density storage while remaining economical.
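The capacity advantage follows directly from bits per cell: a cell storing n bits must reliably hold 2^n distinct charge (voltage) levels, and for the same number of cells, capacity scales linearly with n. The sketch below is a simplified model under that assumption only; it ignores overprovisioning, ECC overhead, and die layout, and the names `CELL_TYPES`, `voltage_states`, and `relative_capacity` are illustrative, not from any Solidigm tool.

```python
# Bits stored per cell for each NAND cell type (simplified model)
CELL_TYPES = {"SLC": 1, "MLC": 2, "TLC": 3, "QLC": 4}


def voltage_states(bits_per_cell: int) -> int:
    """Distinct charge levels a cell must hold to encode bits_per_cell bits."""
    return 2 ** bits_per_cell


def relative_capacity(bits_per_cell: int, baseline_bits: int = 1) -> float:
    """Capacity relative to a baseline cell type, for the same number of cells."""
    return bits_per_cell / baseline_bits


for name, bits in CELL_TYPES.items():
    print(
        f"{name}: {bits} bit(s)/cell, {voltage_states(bits)} voltage states, "
        f"{relative_capacity(bits):.0f}x SLC capacity, "
        f"{relative_capacity(bits, 3):.2f}x TLC capacity"
    )
```

In this model QLC holds about 1.33x the data of TLC for the same cell count, at the cost of distinguishing 16 voltage levels instead of 8, which is one reason QLC suits read-heavy rather than write-heavy workloads.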

2. QLC SSDs: Lower cost per gigabyte

Another advantage is that QLC NAND SSDs offer greater capacity in the same space at a lower cost per gigabyte. They also offer low latency and are highly reliable compared with HDDs, [1] partly because, unlike traditional HDDs, they have no moving parts.

For long-term data storage, QLC NAND SSDs are an excellent option for backup and archival purposes, because these applications must balance performance, cost, and capacity.

Applications for QLC SSD

There are numerous and varied applications for QLC SSDs. Examples include machine learning (ML) and artificial intelligence (AI), where the drive primarily stores captured data that is later read back for analytics processed in a separate workload.

Other applications that rely on QLC SSDs include online analytical processing (OLAP), such as data mining for retail workloads and earthquake analysis, as well as high-performance computing (HPC). QLC SSDs also serve financial services industry (FSI) workloads, hyper-converged infrastructure (HCI), and CDNs.

These applications require rapid, high-bandwidth access to data and low-latency, read-optimized performance. A new product from Solidigm meets these intensive modern storage needs.

Solidigm QLC SSDs

The Solidigm D5-P5336 is a read-performance-optimized storage solution with capacities of up to 61.44TB. As the value endurance QLC SSD option from Solidigm, the D5-P5336 offers high capacity at a low total cost of ownership (TCO) for read-intensive workloads.

To support read-intensive storage, the D5-P5336 reads at up to 7,000 MB/s and writes at up to 3,300 MB/s, and it can be tuned with software to meet various workload needs more effectively. It offers low latency under load, a low error rate, and endurance on the order of 3,000 P/E cycles. [2] It is available in three form factors:

- E3.S, covering a capacity range of 7.68TB to 30.72TB

- U.2, with a capacity range of 7.68TB to 61.44TB

- E1.L, with capacities ranging from 15.35TB to 61.44TB
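To put the rated sequential throughput in perspective, the sketch below estimates how long one full pass over a drive would take. It reads the rated figures as 7,000 MB/s for reads and 3,300 MB/s for writes, uses decimal units (1TB = 10^12 bytes, 1 MB/s = 10^6 bytes per second), and assumes the drive sustains its peak sequential rate the whole time, which real workloads rarely do. The results are back-of-the-envelope lower bounds, not measured figures, and `full_drive_pass_hours` is a hypothetical helper.

```python
def full_drive_pass_hours(capacity_tb: float, throughput_mb_s: float) -> float:
    """Hours to transfer capacity_tb at a constant throughput_mb_s (decimal units)."""
    capacity_bytes = capacity_tb * 1e12
    seconds = capacity_bytes / (throughput_mb_s * 1e6)
    return seconds / 3600


READ_MB_S = 7_000   # rated sequential read (assumed MB/s)
WRITE_MB_S = 3_300  # rated sequential write (assumed MB/s)

# Capacity points taken from the form-factor list above
for capacity in (7.68, 30.72, 61.44):
    read_h = full_drive_pass_hours(capacity, READ_MB_S)
    write_h = full_drive_pass_hours(capacity, WRITE_MB_S)
    print(f"{capacity:>6} TB: read {read_h:.1f} h, write {write_h:.1f} h")
```

Under these assumptions, reading a full 61.44TB drive once takes roughly 2.4 hours and writing it takes roughly 5.2 hours, which illustrates why the drive is positioned for read-dominant workloads.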

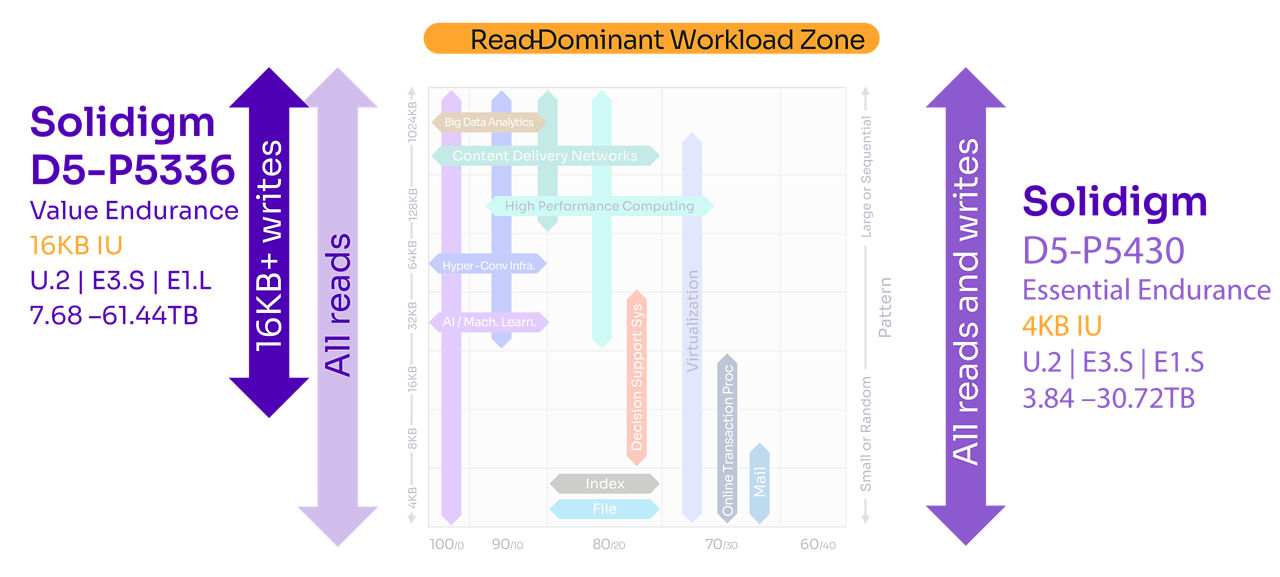

Figure 3 revisits Figure 2 to show where the Solidigm D5-P5336 delivers optimal performance for read-dominant workloads.

Figure 3. A plot illustrating the data needs found in modern data centers and where the Solidigm D5-P5336 fits best.

This QLC SSD solution stores more data in a smaller footprint with faster access, offering extremely high data density and massive scalability. [3] For example, a server populated with twenty-four 61.44TB Solidigm D5-P5336 drives offers a total capacity of 1.47PB.
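The capacity and footprint figures reduce to simple arithmetic. The sketch below checks the 24-drive server capacity and the drive counts behind note [3]'s 1PB comparison. It assumes decimal units (1PB = 1,000TB), and it reads note [3]'s "10 (2U)" as ten 2U servers, that is, 20U of HDD rack space versus 1U for the SSDs; that reading is an interpretation, chosen because it matches the stated 20x figure. The helper names are illustrative.

```python
import math

TB_PER_PB = 1000  # decimal units, as storage vendors use


def server_capacity_pb(drive_count: int, drive_tb: float) -> float:
    """Raw capacity in PB of a server holding drive_count drives of drive_tb each."""
    return drive_count * drive_tb / TB_PER_PB


def drives_for_target(target_pb: float, drive_tb: float) -> int:
    """Smallest whole number of drives whose combined capacity reaches target_pb."""
    return math.ceil(target_pb * TB_PER_PB / drive_tb)


print(f"24 x 61.44TB = {server_capacity_pb(24, 61.44):.2f} PB")
print(
    f"Drives for 1PB: {drives_for_target(1, 4)} x 4TB HDD vs "
    f"{drives_for_target(1, 30.72)} x 30.72TB SSD"
)

# Rack units per note [3], assuming "10 (2U)" means ten 2U servers
hdd_rack_units = 10 * 2
ssd_rack_units = 1
print(f"Rack consolidation: {hdd_rack_units / ssd_rack_units:.0f}x")
```

Reaching 1PB takes about 250 4TB HDDs but only about 33 30.72TB SSDs, which is where the density advantage comes from; the exact rack-unit ratio depends on the chassis each drive count fits into.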

Solidigm customer Taboola illustrates this: with a reliable storage infrastructure built on Solidigm SSDs, Taboola can scale its recommendation engine business even further, confident that the storage will be there when it’s needed.

As Taboola’s Pisetsky puts it, “The high reliability of these SSDs in Taboola’s decentralized, hyperconverged storage architecture keeps maintenance costs in check.”

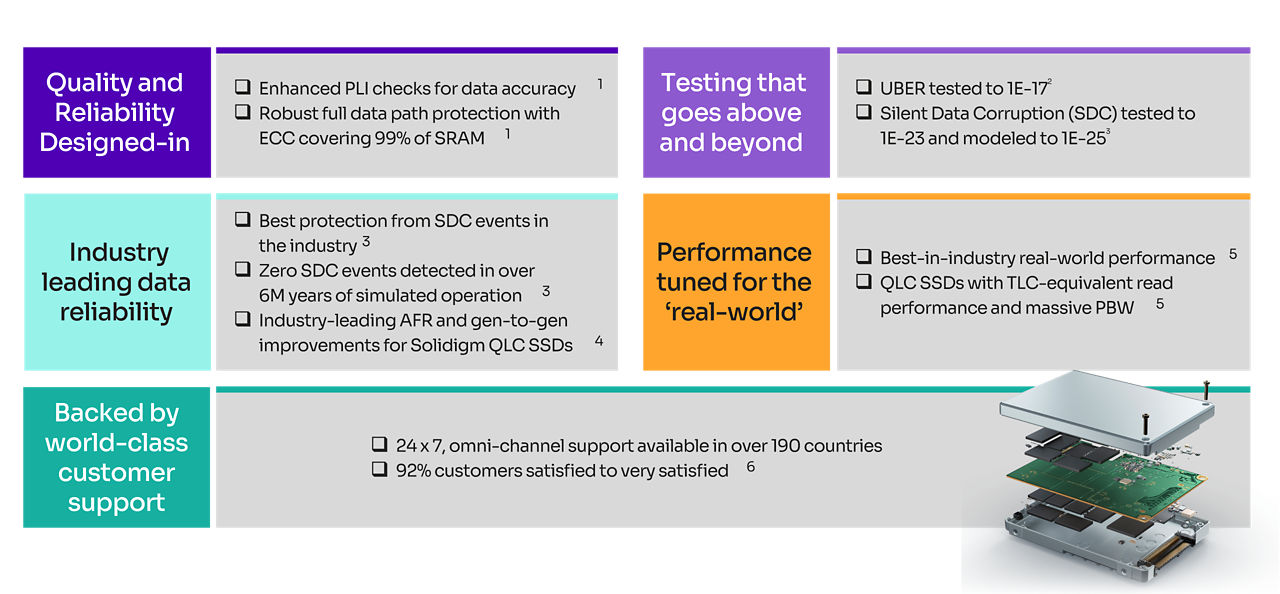

Reliability under intense testing

Figure 4 summarizes the reliability of the Solidigm D5-P5336, including the intense testing it undergoes and the industry-leading data reliability this QLC SSD achieves.

Figure 4. The quality and reliability built into Solidigm D5-P5336.

Highest-capacity PCIe SSD

The Solidigm D5-P5336 is the world’s highest-capacity PCIe 4.0 SSD. [2] Its key features include accelerated data access for widely adopted read- and data-intensive workloads; massive scalability for high-density storage environments; [2] and substantially improved total cost of ownership and sustainability in hyperscale environments. [2,4]

For more information on these topics, watch the full Gestalt IT Storage Field Day videos.

Notes

[2] https://www.solidigm.com/products/technology/d5-p5336-product-brief.html

[3] “Up to 20x reduction of warm storage footprint” claim is based on comparing 4TB HDDs, which require ten 2U servers (20U) of rack space to reach 1PB of storage, against 30.72TB Solidigm SSD D5-P5336 E1.L or U.2 drives, which take 1U of rack space to reach 1PB of storage. That’s up to 20x greater rack consolidation.

[4] https://estimator.solidigm.com/ssdtco/index.htm

Figure 4 Notes

- End-to-end data protection. Source – Solidigm. Enhanced Power Loss Imminent – Additional firmware check validates data is saved accurately upon power restoration. Unclear if others provide this additional firmware check. Robust End-to-End Data Protection – Built-in redundancy where both ECC and CRC can be active at the same time. Protecting all critical storage arrays within the controller – instruction cache, data cache, indirection buffers and phy buffers. ECC coverage of SRAM to over 99% of array is among the highest in the industry.

- UBER testing. Source – Solidigm. Uncorrectable Bit Error Rate (UBER) - tested to a level 10X more stringent than the JEDEC spec. Solidigm tests to 1E-17 under the full range of conditions and cycle counts throughout the life of the drive, which is 10X more stringent than the 1E-16 specified in JEDEC – Solid State Drive Requirements and Endurance Test Method (JESD218). https://www.jedec.org/standards-documents/focus/flash/solid-state-drives. Silent Data Corruption (SDC) - modeled to 1E-25. A typical Reliability Demonstration Test involves 1K SSDs for 1K hours to model to 1E-18. Solidigm drives are tested at the neutron source at Los Alamos National Labs to measure SDC susceptibility to 1E-23 and modeled to 1E-25.

- SDC resistance. Source – Solidigm. Drives are tested at the neutron source at Los Alamos National Labs to measure Silent Data Corruption susceptibility to 1E-23 and modeled to 1E-25. The test prefills drives with a certain data pattern. Next, the neutron beam is focused on the center of the drive controller while IO commands are continuously issued and checked for accuracy. If the drive fails and hangs/bricks, the test script powers down the drives and the neutron beam. The drive is subsequently rebooted, and data integrity is checked to analyze the cause of failure. SDC can be observed during run time, causing a power-down command, or after reboot if the neutron beam has hit the control logic and hung the drive as a result of in-flight data corruption. Because drives go into a disable logical (brick) state when they cannot guarantee data integrity, brick AFR is used as the measure of error handling effectiveness. Solidigm drives have used this testing procedure across 4 generations. Cumulative testing time across generations is the equivalent of over 6M years of operational life, in which zero SDC errors have been detected. The most recent testing used Solidigm D5-P5520 drives, which served as a proxy for Solidigm D5-P5430 drives since they share the same controller and similar firmware. Competitor drives tested were the Samsung 983 ZET, Samsung PM9A3, Samsung PM1733, Micron 7400, Micron 7450, Kioxia XD6, Toshiba XD5, and WD SN840.

- Industry-leading AFR. Source – Solidigm AFR data as of Mar 2023. Annual Failure Rate (AFR) is defined by Solidigm as customer returns less units which upon evaluation are found to be fully functional and ready for use.

- Real-world performance. Source – Solidigm. See Appendix – D5-P5336 Performance Tests for details.

- Customer support satisfaction. Source – Solidigm. Based on analysis of all 2021 call center tickets.
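The UBER note above can be made concrete. UBER is expressed as uncorrectable errors per bit read, so the expected number of such errors for a given read volume is simply bits read multiplied by UBER. The sketch below compares the JEDEC enterprise figure (1E-16) with the 1E-17 level Solidigm cites, for one full read of a 61.44TB drive. It treats UBER as a constant average rate, which is a simplification, and uses decimal units; `expected_uncorrectable_errors` is a hypothetical helper.

```python
def expected_uncorrectable_errors(bytes_read: float, uber: float) -> float:
    """Expected uncorrectable bit errors for a read volume.

    UBER is errors per bit read, so the expectation is bits read times UBER.
    Assumes a constant average rate (a simplification).
    """
    return bytes_read * 8 * uber


DRIVE_BYTES = 61.44e12  # one full read of a 61.44TB drive (decimal units)

for label, uber in (("JEDEC 1E-16", 1e-16), ("Solidigm 1E-17", 1e-17)):
    errors = expected_uncorrectable_errors(DRIVE_BYTES, uber)
    # Average volume that can be read per uncorrectable error
    pb_per_error = 1 / (8 * uber) / 1e15
    print(
        f"{label}: {errors:.4f} expected errors per full-drive read, "
        f"about 1 error per {pb_per_error:.2f} PB read"
    )
```

At 1E-17, a full read of a 61.44TB drive yields about 0.005 expected uncorrectable errors, ten times fewer than at the JEDEC 1E-16 level, or roughly one error per 12.5PB read instead of one per 1.25PB.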

About the Author

Jeniece Wnorowski, Product Marketing Manager at Solidigm, has over 14 years of experience in data center storage solutions. Jeniece got her start in technical marketing at Intel Corporation, then joined Solidigm where she continues to evangelize data center SSD innovations with a variety of companies and partners. Outside of work, Jeniece enjoys spending time with her kids, training for jiu jitsu, and exploring the outdoors.