Inference Context Memory Storage Platform (ICMSP): Why AI inference is becoming a flash problem

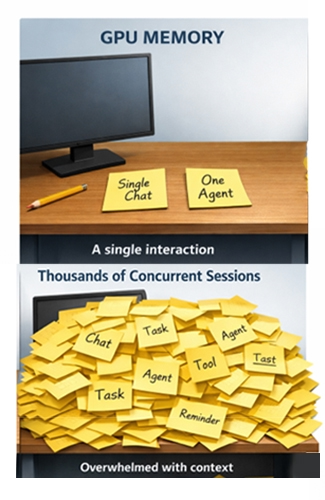

Inference is hitting a new memory wall as modern AI systems carry far more context than they were designed to hold near the processor. With AI shifting from one-shot prompts to long-running conversations and agentic workflows, the memory footprint of inference is expanding rapidly. This exposes a new bottleneck in the infrastructure stack.

Picture a rack of GPUs serving thousands of conversational applications, assistants, and autonomous agents at once. Each interaction may look lightweight on its own, but together they create a growing accumulation of “working memory” that must be preserved to maintain coherent, efficient responses.

That working memory lives in the key–value (KV) cache, which stores previously computed key/value pairs for reuse during generation to avoid redundant computation.1

When that context no longer fits in the memory tiers closest to the GPU, performance degrades. Latency rises. Throughput drops. And some of the most expensive hardware in the data center begins to sit idle.

Introduced by NVIDIA CEO Jensen Huang during NVIDIA Live at CES 2026, NVIDIA’s Inference Context Memory Storage Platform (ICMSP, sometimes shortened to “ICMS”) positions a pod-level, flash-backed context tier as an AI-native KV-cache layer that can be shared and reused at rack scale.2,3,4

The inference memory wall

For much of the past decade, AI infrastructure discussions have focused on feeding GPUs. As inference workloads evolve, that focus expands to include how context accumulates over time. As models grow more capable, inference increasingly retains state rather than discarding it.

KV cache is essential for performance, but it grows along multiple dimensions at once:

- Longer context windows increase the size of each session’s cache.

- Higher concurrency multiplies the total footprint across users.

- Agentic workflows extend session lifetimes, keeping context active far longer than a single prompt–response exchange.
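To make the three growth dimensions above concrete, here is a rough sizing sketch. It uses the commonly cited estimate that each token stores one key and one value vector per layer per KV head. The `ModelShape` class, its field values, and the session counts are illustrative assumptions, not figures from NVIDIA or from any specific model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelShape:
    """Hypothetical transformer dimensions; not tied to any specific model."""
    num_layers: int
    num_kv_heads: int
    head_dim: int
    bytes_per_element: int = 2  # assumption: FP16/BF16 KV entries


def kv_bytes_per_token(shape: ModelShape) -> int:
    # One key vector and one value vector per layer, per KV head.
    return (2 * shape.num_layers * shape.num_kv_heads
            * shape.head_dim * shape.bytes_per_element)


def kv_footprint_bytes(shape: ModelShape, context_tokens: int,
                       concurrent_sessions: int) -> int:
    # Footprint scales linearly with context length and with concurrency.
    return kv_bytes_per_token(shape) * context_tokens * concurrent_sessions


shape = ModelShape(num_layers=80, num_kv_heads=8, head_dim=128)
print(kv_bytes_per_token(shape))  # 327680 bytes (320 KiB) per token

GIB = 1024 ** 3
for sessions in (1, 100, 1000):
    total = kv_footprint_bytes(shape, context_tokens=32_768,
                               concurrent_sessions=sessions)
    print(f"{sessions:>5} sessions: {total / GIB:,.0f} GiB")
```

Under these assumed dimensions a single 32K-token session already holds about 10 GiB of KV state, so concurrency and session lifetime, rather than model weights, end up setting the capacity requirement.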

The result is that an inference service can become memory-limited long before it becomes compute-limited. Even when GPUs have available cycles, they may stall while waiting for context to be retrieved or recomputed. This effect is most visible under real-world conditions, where tail latency matters and workloads fluctuate dynamically.

One common approach is to push context into shared storage systems that already exist in the data center. For active inference, this introduces new latency and traffic challenges. Traditional file systems and object stores are optimized for durability and sharing, not for latency-sensitive reads that sit directly on the critical path of token generation. As KV traffic moves north–south across the network, latency becomes less predictable and congestion increases.

KV cache requires storage-scale capacity alongside memory-like responsiveness. NVIDIA’s ICMS/ICMSP concept is aimed squarely at that gap.3,4

The G3.5 context tier in NVIDIA’s inference memory hierarchy

NVIDIA describes the Inference Context Memory Storage Platform as a fully integrated storage infrastructure that uses the NVIDIA BlueField-4 data processor to create a purpose-built context memory tier operating at the pod level, intended to bridge the gap between high-speed GPU memory and scalable shared storage. 3,4

In NVIDIA’s technical description, this introduces a new “G3.5” layer: an Ethernet-attached flash tier optimized specifically for KV cache, designed to be large enough for shared, evolving, multi-turn agent context, and close enough for frequent pre-staging back into GPU/host memory without stalling decode. 3

In practice, NVIDIA positions ICMS/ICMSP as a rack-/pod-local flash tier optimized for derived inference state (ephemeral by nature). Context blocks can be stored, reused, and orchestrated across services without rematerializing the same history independently on each node, and then staged back upward when needed. 3

Figure 1. ICMSP new tier for active reference


Conceptually, this creates a middle tier between GPU HBM and the data lake. It does not replace existing storage systems, but it changes how and where active inference state is handled.

A segmentation model for inference infrastructure

One way to understand ICMS/ICMSP is to view inference infrastructure as three distinct zones:

Compute node

Closest to the GPU is the compute node, which holds the immediate working set in HBM, system memory, and local SSD. This is where tokens are generated and latency sensitivity is highest.

Pod-local context tier

Alongside it sits the ICMS/ICMSP pod-local context tier. This layer holds inference state that is too large to remain permanently in near-GPU memory but still needs to be accessed quickly and predictably. NVIDIA describes this as a pod-level context tier with predictable, low-latency, RDMA-based access characteristics when paired with Spectrum-X Ethernet. 3,4

Data lake

Beyond the pod lies the data lake, which remains the durable system of record for models, datasets, logs, and artifacts. This tier is essential, but it is not designed for per-token responsiveness. ICMS/ICMSP complements the data lake by reducing the pressure to push latency-sensitive KV data into storage infrastructure designed primarily for durability. 3

Supporting this model requires more than capacity alone; it requires a way to manage inference context efficiently at pod scale.
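The three-zone model can be pictured as a lookup path: check near-GPU memory first, fall back to the pod-local tier, and recompute only as a last resort. The sketch below is a toy, in-process illustration of that flow. The `ContextTiers` class, its method names, and the LRU demotion policy are assumptions made for illustration; they are not NVIDIA's API, and a real implementation would move blocks over RDMA rather than between Python dictionaries.

```python
from collections import OrderedDict
from typing import Callable, Dict, Tuple


class ContextTiers:
    """Toy model: near-GPU memory, a pod-local tier, and recompute fallback."""

    def __init__(self, near_gpu_capacity: int):
        self.near_gpu = OrderedDict()          # block_id -> KV block, LRU order
        self.pod_tier: Dict[str, bytes] = {}   # stands in for pooled flash
        self.near_gpu_capacity = near_gpu_capacity

    def put(self, block_id: str, kv_block: bytes) -> None:
        self.near_gpu[block_id] = kv_block
        self.near_gpu.move_to_end(block_id)
        # Demote least recently used blocks instead of discarding them.
        while len(self.near_gpu) > self.near_gpu_capacity:
            evicted_id, evicted = self.near_gpu.popitem(last=False)
            self.pod_tier[evicted_id] = evicted

    def get(self, block_id: str,
            recompute: Callable[[str], bytes]) -> Tuple[bytes, str]:
        if block_id in self.near_gpu:
            self.near_gpu.move_to_end(block_id)
            return self.near_gpu[block_id], "near_gpu"
        if block_id in self.pod_tier:
            kv_block = self.pod_tier.pop(block_id)
            self.put(block_id, kv_block)       # stage back upward
            return kv_block, "pod_tier"
        kv_block = recompute(block_id)         # most expensive path
        self.put(block_id, kv_block)
        return kv_block, "recomputed"


tiers = ContextTiers(near_gpu_capacity=2)
for name in ("a", "b", "c"):
    tiers.put(name, name.upper().encode())

print(tiers.get("a", lambda k: b"?")[1])  # pod_tier
print(tiers.get("c", lambda k: b"?")[1])  # near_gpu
print(tiers.get("z", lambda k: b"Z")[1])  # recomputed
```

The point of the middle branch is that a miss in near-GPU memory becomes a fetch rather than a full prefill recomputation, which is the behavior the pod-local tier is meant to provide.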

Figure 2. GPU pod KV cache


The role of DPUs in ICMS/ICMSP

ICMS/ICMSP extends beyond adding SSD capacity by introducing a dedicated processing layer for inference context. NVIDIA’s published materials emphasize that the platform is powered by the NVIDIA BlueField-4 data processor and is paired with Spectrum-X Ethernet to support efficient, RDMA-based access to KV cache data.3,4

NVIDIA also claims that BlueField-4 enables hardware-accelerated KV cache placement that reduces metadata overhead and data movement, while Spectrum-X Ethernet serves as the high-performance fabric for access to AI-native KV cache.4

Taken together, the architectural choices NVIDIA describes are designed to keep context handling closer to the GPUs and more deterministic, reducing stalls and time spent waiting on context under inference load.

A new way to size inference memory

One of the most significant implications of ICMS/ICMSP is how capacity planning evolves for inference infrastructure. Inference context becomes a rack-scale/pod-scale resource rather than a fixed amount of memory attached to each accelerator.

In NVIDIA’s technical description, the G3.5 tier provides petabytes of shared capacity per GPU pod for inference context, extending the hierarchy beyond HBM/DRAM/local SSD while remaining materially closer (and lower-latency) than traditional shared storage.3

This introduces a new “flash multiplier” for inference. GPU deployments increasingly drive demand for high-density, power-efficient SSDs deployed inside (or immediately adjacent to) the pod.

What ICMSP-class systems look like

Early ICMSP-class systems resemble familiar storage building blocks, repurposed. What is new is not the components themselves but the requirement to serve KV-cache context with memory-like responsiveness and predictable behavior under inference load, rather than serving files or objects for general applications.3,4

- Dense NVMe shelves provide large, pooled flash capacity.

- DPUs (as NVIDIA describes for its implementation: BlueField-4) front those pools, handling context placement and data movement functions.3,4

- High-bandwidth, low-latency Ethernet (NVIDIA highlights Spectrum-X Ethernet) connects the tier to the rest of the pod.3,4

From a hardware perspective, this looks like well-understood infrastructure, even as its role changes. Instead of serving files or objects, the system stores and serves derived inference context with predictable behavior under load.

Workloads driving the need for context memory

The demand for ICMS/ICMSP is being driven by the same workloads that are shaping modern inference. Agentic systems operate in loops—observing, planning, acting, and reflecting—keeping context alive far longer than a single response. Long-context inference grows KV cache even when models themselves fit comfortably in GPU memory. High-concurrency deployments make tail behavior and predictability as important as raw throughput.

In these environments, context becomes a first-order capacity consideration.

What ICMSP means for SSD requirements

Treating flash as context memory reshapes what buyers optimize for. Density becomes critical because pod real estate is limited and context scales with GPU count. Power efficiency matters because AI factories are constrained not just by space, but by watts. Predictable latency and quality of service matter because slow reads translate directly into idle GPUs.

Endurance must also align with how context behaves. Some workloads reuse KV heavily, while others churn aggressively as sessions are created and evicted. In inference architectures that rely on external KV cache tiers, SSD behavior under sustained, real-world conditions matters more than peak benchmark performance.
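One way to reason about the reuse-versus-churn question is to convert a sustained KV write rate into drive writes per day (DWPD) across the pooled flash. The sketch below does that arithmetic. The function name, the write rates, the pool capacity, and the write amplification default are all hypothetical inputs chosen for illustration, not measurements or vendor specifications.

```python
def required_dwpd(kv_write_gb_per_s: float, tier_capacity_tb: float,
                  write_amplification: float = 1.0) -> float:
    """Drive writes per day implied by a sustained write rate over a flash pool.

    Uses decimal units (1 GB = 1e9 bytes, 1 TB = 1e12 bytes).
    """
    seconds_per_day = 86_400
    bytes_per_day = (kv_write_gb_per_s * 1e9 * seconds_per_day
                     * write_amplification)
    return bytes_per_day / (tier_capacity_tb * 1e12)


# Hypothetical 1,000 TB pool under two assumed workload profiles.
reuse_heavy = required_dwpd(kv_write_gb_per_s=2.0, tier_capacity_tb=1000.0)
churn_heavy = required_dwpd(kv_write_gb_per_s=20.0, tier_capacity_tb=1000.0)

print(f"reuse-heavy: {reuse_heavy:.2f} DWPD")  # 0.17
print(f"churn-heavy: {churn_heavy:.2f} DWPD")  # 1.73
```

In this illustration a tenfold difference in eviction-driven writes moves the same pool from a fraction of a drive write per day to well over one, which is why the mix of reuse and churn matters when matching SSD endurance to a context tier.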

Where Solidigm fits in the context offload era

For Solidigm™, the emergence of rack-local context tiers reinforces a simple idea: As inference becomes more persistent and multi-turn, high-capacity flash is moving closer to the GPUs to keep context accessible, predictable, and cost-efficient at scale.

Solidigm supports this shift with SSDs that map to two ends of the design space.

- Maximum performance where latency headroom is tight: Solidigm™ D7-PS1010 is a PCIe Gen5 SSD option designed for high throughput and real-world IO conditions.

- Maximum density where terabytes-per-rack dominates: Solidigm™ D5-P5336 SSD is positioned for very high capacity (including configurations up to 122TB) to maximize density in constrained rack/power envelopes.

As requirements mature, the right SSD choice will depend on how much of the KV lifecycle is dominated by reuse versus churn, and how tightly latency requirements are enforced at scale.

The value in dollars and watts

NVIDIA has publicly attached concrete performance and efficiency claims to the ICMS/ICMSP concept. Its announcement materials cite up to 5x tokens per second and up to 5x power efficiency versus “traditional storage”; these figures are NVIDIA’s own claims and depend on the baseline and workload. 2,4

In practice, the value of a context tier shows up when it reduces stalls, improves utilization, and increases concurrency at a fixed latency target. Those effects translate directly into lower cost per delivered token because GPU time is far more expensive than storage capacity.
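The link between stalls and cost per token can be shown with simple arithmetic. The sketch below assumes a GPU whose hourly cost is fixed and whose delivered throughput falls in proportion to the time it spends waiting on context. The function name, the hourly cost, the peak throughput, and the stall fractions are hypothetical values for illustration only.

```python
def cost_per_million_tokens(gpu_cost_per_hour: float,
                            peak_tokens_per_second: float,
                            stall_fraction: float) -> float:
    """Cost per one million delivered tokens, given time lost to stalls."""
    if not 0.0 <= stall_fraction < 1.0:
        raise ValueError("stall_fraction must be in [0, 1)")
    effective_tps = peak_tokens_per_second * (1.0 - stall_fraction)
    tokens_per_hour = effective_tps * 3600
    return gpu_cost_per_hour / tokens_per_hour * 1_000_000


# Hypothetical GPU: 4.00 per hour, 1,000 tokens/s when never stalled.
no_stalls = cost_per_million_tokens(4.0, 1000.0, stall_fraction=0.0)
half_stalled = cost_per_million_tokens(4.0, 1000.0, stall_fraction=0.5)

print(f"no stalls:    {no_stalls:.2f} per million tokens")     # 1.11
print(f"50% stalled:  {half_stalled:.2f} per million tokens")  # 2.22
```

Because the GPU cost accrues whether or not tokens are produced, halving the stall-free time doubles the cost per delivered token in this simplified model.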

The bigger picture

ICMS/ICMSP signals where AI infrastructure is heading: Toward rack-scale systems where compute, networking, and storage are co-designed around inference efficiency. As inference becomes more persistent and agentic, the central challenge becomes how gracefully systems handle context as it grows beyond near-GPU memory.

Increasingly, the answer is to place flash where memory runs out and keep it close enough to behave like part of the pod. As that pattern spreads, storage becomes one of the primary levers shaping the economics of AI inference.

In this environment, storage shifts from passive capacity to active infrastructure. Selecting flash for inference context requires careful attention to density, power efficiency, and predictable behavior under sustained, real-world load.

Solidigm works with AI infrastructure builders to align flash technology with these emerging requirements, helping keep inference context accessible, efficient, and cost-effective as inference scales. Learn more at www.solidigm.com.

About the Author

Jeff Harthorn is Marketing Analyst for AI Data Infrastructure at Solidigm. Jeff brings hands-on experience in solutions architecture, product planning, and marketing. He shapes corporate AI messaging, including competitive studies on liquid-cooled E1.S SSDs, translating geek-level detail into crisp business values for our customers and collaborative partners. Jeff holds a Bachelor of Science in Computer Engineering from California State University, Sacramento.

Sources

1. NVIDIA TensorRT-LLM Docs (KV cache definition / behavior): https://nvidia.github.io/TensorRT-LLM/latest/features/kvcache.html

2. NVIDIA Blog (CES recap, Jan 5, 2026): “NVIDIA Rubin Platform, Open Models, Autonomous Driving…” https://blogs.nvidia.com/blog/2026-ces-special-presentation/

3. NVIDIA Technical Blog (Jan 6, 2026): “Introducing NVIDIA BlueField-4-Powered Inference Context Memory Storage Platform…” https://developer.nvidia.com/blog/introducing-nvidia-bluefield-4-powered-inference-context-memory-storage-platform-for-the-next-frontier-of-ai/

4. NVIDIA Newsroom / Press Release (Jan 5, 2026): “NVIDIA BlueField-4 Powers New Class of AI-Native Storage Infrastructure…” https://nvidianews.nvidia.com/news/nvidia-bluefield-4-powers-new-class-of-ai-native-storage-infrastructure-for-the-next-frontier-of-ai